1) I gotcha. I think. So, if a test shows that the probability is low, and thus should be thrown out, yet none of these ferment temps experiments (except for perhaps one) have reached that threshold of low enough to throw out, then maybe we should be *at the very least* retesting?

I think you're still missing something that I completely missed until AJ said it about 2-3 times lol...

The hypothesis being tested is the null hypothesis: "These two beers produced with different methods are indistinguishable." That is the hypothesis we're TRYING to throw out during all this testing.

So if something doesn't achieve significance, we don't throw out the positive hypothesis of "fermentation temps matter", we fail to throw out the hypothesis "fermentation temps have no impact on the finished beer."

It's hard to wrap your mind around that distinction, but once you do, it all falls into place.

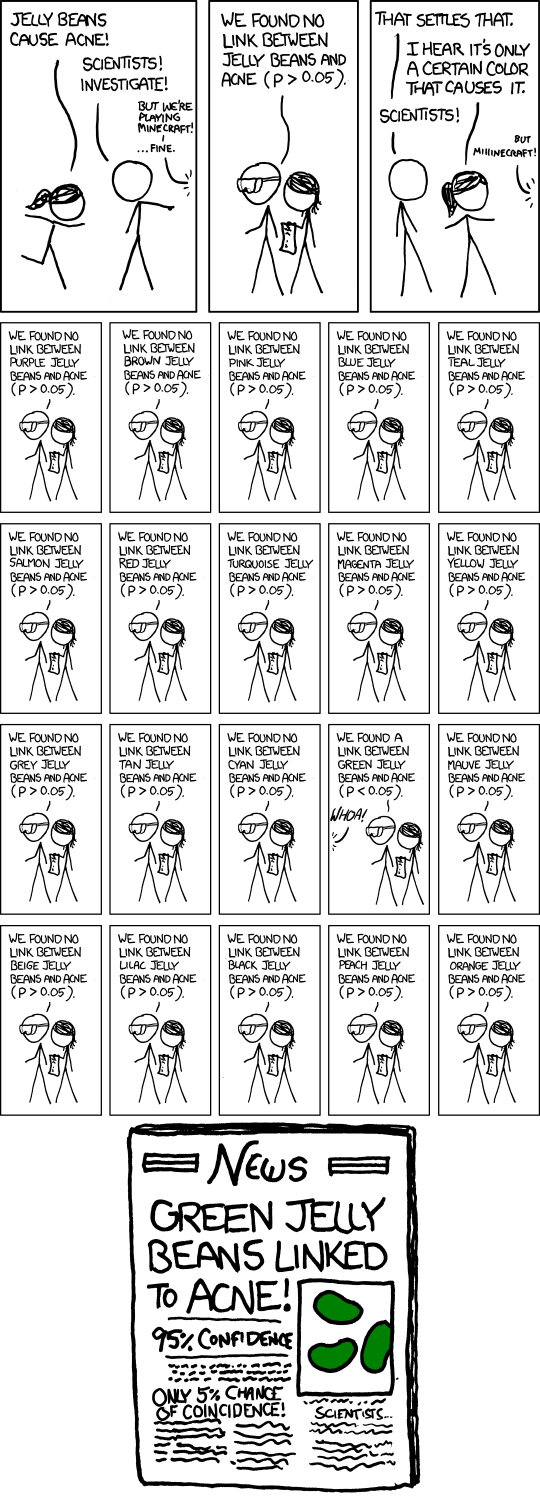

When we say something "didn't achieve significance", it's referring to a commonly accepted threshold of p<0.05, which is actually a pretty stringent criteria. When we say that it didn't achieve significance, we are not throwing out the positive hypothesis, i.e. we are not proving they're distinguishable, but we are not declaring them indistinguishable. We're

failing to prove beyond a certain confidence that they're distinguishable.

If you have, say, 36 tasters, you would expect pure guessing to result in 12 (33%) tasters selecting the odd beer. You would require 17 to achieve p=0.058 (close to significance) and 18 (50%) to achieve p<0.05 (actually p=028) to declare that the test was "statistically significant".

But what if you only get 16? That's p=0.109. We would declare that not statistically significant. But it's not 12 (or even less). So you're in a quandary. That result isn't enough to achieve significance, but it's also hard to accept the null hypothesis as true. You have to weigh the odds that the variance of 4 tasters is random chance (null hypothesis true) vs the odds that the variance of 4 tasters is real (null hypothesis false, but the beers are not distinguishable enough to achieve p<0.05).

The simple fact is that p<0.05 is to some degree an arbitrary threshold. And that sample size is important, because a sample of 20 tasters requires 11 (55%) to get to p<0.05 but a sample of 200 tasters requires only 79 (39.5%) to get to p<0.05.

The percentage difference relative to guessing to declare significance is dependent on sample size.

This was my point with the meta-analysis. I looked at two things across a bunch of experiments testing the same variable:

1) The "error", i.e. the results relative to chance, always pointed in the same direction. It was ALWAYS >=33% of tasters selecting the odd beer, but the results weren't strong enough to reliably achieve significance. If the "error" relative to guessing was both positive and negative, I would have a lot more difficult time improving the significance by adding the experiments together.

2) If the error is always the same direction but the experiments don't achieve significance, I assume that's a sample size problem. I.e. "these beers are different but not different enough that it's easy to detect." By aggregating the experiments, I "create" a larger sample size, and with a larger sample size, I need a lower percentage correctly selecting the odd beer to declare p<0.05.

2) Ok, so how improbable do they need to be before you decide that for practical purposes you should say they are not indistinguishable?

Ay, there's the rub...

I'm personally convinced based upon what I've done here in the meta-analysis. I have rejected the null hypothesis, that ferm temp doesn't matter. (Preference is something I am still unsure of, but that's a different issue).

applescrap is not. He thinks I'm basically searching for numbers in support of my bias (that ferm temp matters), which is certainly possible. And I suspect he thinks it affects the beer, but that it's not a sizable enough effect to worry about. He's also pointed to the preference numbers in some tests for warm ferment as evidence that even if the two are different we can't categorically say "cool ferment is better" in all instances, which is truly what we're trying to discover.

mongoose IMHO may not be convinced. He has legitimate concerns about the quality of the tasting panels and the methodology of the study itself that make him doubt that the original studies are good enough. As I pointed out elsewhere, even if my meta-analysis was perfectly conducted, it doesn't correct for methodological errors in the original studies.

I'm not sure of AJ's position, actually, because as he's said he hasn't really read the bulk of the experiments. He's been invaluable to all of us by providing background on testing methodology. I would say that he's likely rejected the null hypothesis as well based on what he's said, but trying to say anything beyond that statement isn't supported by what I've read that he's posted in this thread.

So there you go. 4 different posters who have been arguing this stuff vociferously, and we could have as many as 4 different interpretations of the data.

-----------------------------------

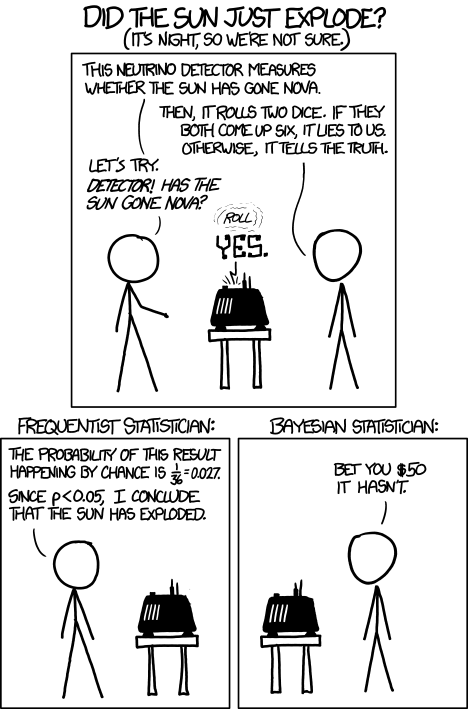

BUT, and this is the important thing, AJ made a very useful point. In all these things we're talking about confidence and error, and how they are balanced against the cost of being wrong (either way; the cost of additional equipment/process to do things a certain way vs the cost of making sub-par beer).

I use fermentation temperature control. I really like the beer I make. I've been doing this 10+ years and based on my process, I believe I'm making commercial-quality beer. I've already got sunk costs into the fridge and temp controller, so the only marginal cost of temp control is electricity and floor space in my garage. Even if I'm wrong, I see no reason to change my process because whatever I'm doing is working. So I'm just geeking out on statistics, numbers, and debate, not actively looking to change my own process anyway.

To answer your questions about how improbable it needs to be,

the answer is up to you. If you're not using temp control, do the results of the experiments justify in your own mind that you should start using temp control? If you're already using temp control, do the results of the experiments justify in your own mind that the electricity and space savings are justified to get rid of your temp control setup? That's all that matters.

![Craft A Brew - Safale BE-256 Yeast - Fermentis - Belgian Ale Dry Yeast - For Belgian & Strong Ales - Ingredients for Home Brewing - Beer Making Supplies - [3 Pack]](https://m.media-amazon.com/images/I/51bcKEwQmWL._SL500_.jpg)