Here's a bit more perspective on the confusion caused by Brulosophy's misinterpretation of significance (p). There are two types of problem of interest:

1. We make a change in materials or process in the expectation that the beer will be improved. We hope that most of our 'customers' will notice a change.

2. We make a change in materials or process in order to save time and/or money. We hope that few, if any, of our 'customers' will notice a change.

'Customers' is in quotes because, while in a commercial operation they are literally customers, in the homebrewing case they are our families, friends, colleagues or brew club members (or all of these).

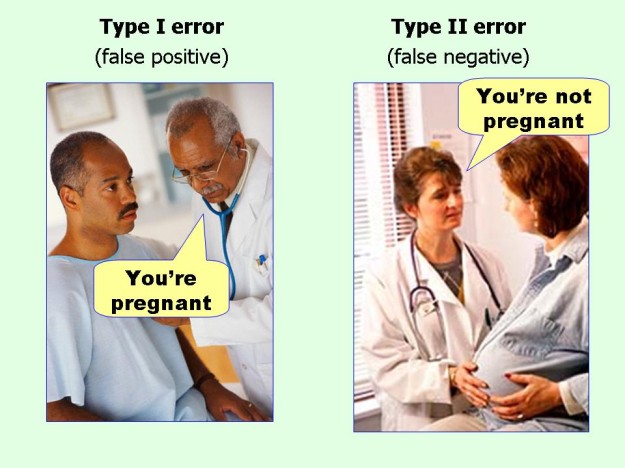

The two cases are quite distinct and call for different interpretations of triangle test results. In the first case we do not want to be misled by our interpretation of the test data into thinking that there is a difference in the beers when in fact there isn't. We want the probability of this kind of error (Type I) to be quite low. In the second case we don't want to be misled into thinking that there is no difference when in fact there is. We want the data to imply that the probability of this kind of error (Type II) is quite low.
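To put rough numbers on the two kinds of error, here is a minimal Python sketch. It assumes the usual guessing model for triangle tests, which the text does not spell out: a fraction `pd` of tasters truly detect the difference and always pick the odd beer, while everyone else guesses and is right one time in three. The function names (`binom_pmf`, `p_correct`, `critical_count`, `type_ii_error`) are hypothetical. For a chosen panel size and Type I risk `alpha`, it finds the smallest number of correct answers that would count as significant, then estimates the Type II risk: the chance of falling short of that count when a fraction `pd` of the population really can tell the beers apart.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def p_correct(pd: float) -> float:
    """Assumed guessing model: a fraction pd of tasters truly detect the
    difference and always pick the odd beer; the rest guess (1 chance in 3)."""
    return pd + (1 - pd) / 3


def critical_count(n: int, alpha: float) -> int:
    """Smallest count c such that c or more correct answers has probability
    at most alpha under the null hypothesis (pd = 0); n + 1 if none does."""
    p0 = p_correct(0.0)
    for c in range(n + 1):
        upper_tail = sum(binom_pmf(k, n, p0) for k in range(c, n + 1))
        if upper_tail <= alpha:
            return c
    return n + 1


def type_ii_error(n: int, alpha: float, pd: float) -> float:
    """Chance of falling short of the critical count (and so calling the
    beers 'not different') when a fraction pd really can tell them apart."""
    c = critical_count(n, alpha)
    p1 = p_correct(pd)
    return sum(binom_pmf(k, n, p1) for k in range(c))


if __name__ == "__main__":
    n, alpha = 40, 0.05  # panel size from the decoction example; 5% Type I risk
    print("critical count:", critical_count(n, alpha))
    for pd in (0.1, 0.2, 0.3):
        print(f"pd = {pd:.1f}: Type II risk = {type_ii_error(n, alpha, pd):.3f}")
```

Raising `pd` shrinks the Type II risk; for a fixed panel, lowering `alpha` pushes the critical count up and the Type II risk up with it.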

Suppose we have two lagers: one brewed with a triple decoction mash and one brewed with no decoctions (melanoidin malt instead). Further suppose that these beers are presented to a panel of 40 and that 14 of the tasters correctly detect the difference. Brulosophy would plug these numbers into the p formula or table, come up with p = 0.47 and declare the result not statistically significant. Those with a passing familiarity with statistics (and, unfortunately, quite a few who are well acquainted with it) would then declare the test to be of no statistical significance and therefore flawed.
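The p value in this example is the probability, under the null hypothesis, of 14 or more correct answers out of 40, i.e. an upper binomial tail. A minimal Python sketch, assuming a one-sided test in which every taster who can't tell the beers apart picks the odd one with probability 1/3; the function name `triangle_p_value` is hypothetical:

```python
from math import comb


def triangle_p_value(correct: int, panel: int) -> float:
    """One-sided triangle-test p value: the probability of `correct` or more
    right answers from `panel` tasters if nobody can tell the beers apart,
    so each taster just guesses and is right with probability 1/3."""
    p0 = 1 / 3
    return sum(
        comb(panel, k) * p0**k * (1 - p0) ** (panel - k)
        for k in range(correct, panel + 1)
    )


if __name__ == "__main__":
    # The decoction example: 14 of a 40-member panel pick the odd beer.
    print(f"p = {triangle_p_value(14, 40):.3f}")
```

A large p value like this says only that 14 correct answers are quite ordinary if decoction makes no difference; by itself it says nothing about how large a difference the test could have missed.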

Thus far we have determined that, if the null hypothesis is true (decoction makes no difference), the probability of getting 14 or more correct answers is 47%. A brewer considering going to the extra trouble of decoctions would like to see that probability be very low, because that would mean the null hypothesis is unlikely to be the correct one and, therefore, that the alternative hypothesis (that decoction does make a difference) is likely true. These data don't show that, so this brewer would probably conclude that the evidence that decoction makes a perceptible change isn't very strong and decide not to bother with it. The data are not very useful to him, but they are still useful enough for him to decide on a course of action. This does not mean the data aren't significant and should be tossed on the scrap heap while the investigators wring their hands trying to discover what they did wrong.

To see this, we look at the data from the perspective of Item 2, i.e. of the brewer who has been doing decoctions and wants to see if he can get away with dropping them in favor of melanoidin malt. He looks at the significance of the test with respect to Type II errors: he assumes that there IS a difference and wants to determine the probability of getting the test data under that assumption. Things are not quite symmetrical here, as there is only one null hypothesis (that the beers are not differentiable) whereas there are an infinite number of alternative hypotheses, e.g. that 1% of the population can differentiate them, that 2% can, that 2.47651182% can, and so on. So he decides he'll drop decoctions if 20% or less of his 'customers' can detect a difference. He now computes the probability of getting 9 or fewer correct answers in a beer that is 20% detectable (he can do the computation with the spreadsheet I offered earlier) and finds that probability to be 0.0495. He can, therefore, be quite confident from these data that he can drop the decoctions. Thus the same test that was not statistically significant to the guy considering adding decoctions to his process is seen to be significant to the guy considering dropping them.
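The Type II side can be sketched the same way. This minimal Python sketch again assumes the guessing model (a fraction `pd` of tasters always pick the odd beer, the rest are right one time in three); it is not the spreadsheet mentioned above, whose formulas aren't reproduced here, so its figures need not match the ones quoted. The function name `prob_at_most` is hypothetical. For the 14 correct answers out of 40 in the decoction example, it prints the probability of that few (or fewer) correct answers under several alternative hypotheses, which shows how the answer depends on which alternative you pick.

```python
from math import comb


def prob_at_most(correct: int, panel: int, pd: float) -> float:
    """Probability of `correct` or fewer right answers from `panel` tasters
    when a fraction pd of the population can truly detect the difference.
    Assumed guessing model: detectors always pick the odd beer; everyone
    else guesses and is right with probability 1/3."""
    p1 = pd + (1 - pd) / 3
    return sum(
        comb(panel, k) * p1**k * (1 - p1) ** (panel - k)
        for k in range(correct + 1)
    )


if __name__ == "__main__":
    panel, observed = 40, 14  # counts from the decoction example
    # Each pd is a different alternative hypothesis; a small result means the
    # observed count would be unlikely if at least that fraction could tell.
    for pd in (0.10, 0.20, 0.30, 0.40):
        risk = prob_at_most(observed, panel, pd)
        print(f"pd = {pd:.2f}: P(X <= {observed}) = {risk:.4f}")
```

A brewer would pick the largest `pd` he is willing to tolerate and drop the decoctions only if the value printed for that `pd` is small.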

To return to the original question: a pro brewer who is aware of this aspect of the triangle test might extract some useful guidance from the Brulosophy tests (though he would want to see some improvements in the way they are conducted). Finding a pro brewer with this awareness, however, would probably not be easy. You'd have to hang out in the hospitality suites at ASBC or MBAA meetings, and the people you'd find there who knew about this probably wouldn't be brewers but rather the professors and lab rats who go to these things. I'll also note that the ASBC's Methods of Analysis (MOA) says nothing about Type II error (though I have not seen the latest version of it).

It's not as if I don't already set aside 4 or 5 hours on brew day, and I have always mashed for at least an hour, usually longer because I forget to heat my strike water early. Anyway, thanks for the reminder.

Question: If I want more unfermentable sugars, is a shorter mash an option? I understand a higher mash temperature (156-160 °F) does this, but it would be good to know.
