You're headed my direction? As in process or geography?

I fiddle much less with water and pH than I used to. Didn't see much benefit.

If you are fortunate enough to have water that comes out of the tap with low alkalinity and a blend of the stylistic ions that suits your taste vis-à-vis the styles you like to brew, and your brewing practices are such that mash pH falls into the right band, then there wouldn't be much benefit to tweaking your water. If, OTOH, you are like most brewers, your failure to appreciate the benefits would cut you off from the opportunity to dramatically improve your beers.

I have never added alkalinity to brewing liquor. It would seem to me that one's recipe would be highly suspect and that the calcium salts should in that case be added to the boil.

> A.J., was I off base in my post #36 above?

No, not at all.

> Are not some higher end Lovibond dark roast malts extremely acidic, and would not their use (as opposed to tamer roast barley) more likely necessitate the addition of additional alkalinity?

Yes. A kg of a 150L Briess crystal measured by Kai is, to pH 5.5, equivalent to 4 mL of 23 Be' HCl. A kg of 600L Crisp black malt I measured is equivalent to 5.2 mL of that same acid (for reference, a kg of sauermalz is equivalent to 28 mL of the 23 Be' HCl). A kg of the 600L malt would require 63 mEq of protons to be absorbed to get it to pH 5.5. At 0.9 mEq/mmol from bicarbonate that's 70 mmol, which is 70*84 = 5880 mg (5.88 grams) of baking soda. But if you mashed a pound of it with 9 lbs of a typical base malt in water of 0 alkalinity and 2.5 mEq/L calcium hardness, you would arrive at pH 5.5 and find that it only delivers 29 mEq protons and the base malt absorbs 34. Thus, unless you get up into higher colored malt percentages, you aren't going to need to add alkali.
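For anyone who wants to rerun the baking soda arithmetic with their own numbers, here is a minimal Python sketch. The function name `baking_soda_grams` is made up for illustration; the 63 mEq/kg, 0.9 mEq/mmol and 84 mg/mmol figures are the ones quoted in this post, and the pound-to-kilogram factor is the usual 0.4536.

```python
KG_PER_LB = 0.4536  # standard conversion factor

def baking_soda_grams(protons_meq, meq_per_mmol=0.9, mg_per_mmol=84.0):
    """Grams of NaHCO3 needed to absorb `protons_meq` milliequivalents of protons.

    meq_per_mmol: protons absorbed per mmol of bicarbonate at the target pH
                  (0.9 is the figure used in the post for pH 5.5).
    mg_per_mmol:  molar mass of sodium bicarbonate.
    """
    mmol = protons_meq / meq_per_mmol
    return mmol * mg_per_mmol / 1000.0

# One kilogram of the 600L black malt, mashed alone, to pH 5.5.
per_kg = baking_soda_grams(63.0)

# One pound of the same malt, mashed alone.
per_lb = baking_soda_grams(63.0 * KG_PER_LB)

print(f"per kg: {per_kg:.2f} g, per lb: {per_lb:.2f} g")
```

The per-kilogram result reproduces the 5.88 g above. The per-pound figure only applies to the malt mashed by itself; in a real grist the base malt absorbs most of those protons, which is the point of the post.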

> Some of these malts (if 1 lb. was to be mashed alone) would necessitate the addition of perhaps 2 to 4 g. of baking soda all by themselves to hit pH 5.4-5.5 in the mash. Of course the alkaline base malts are utilized to counter this...

There it is!

> Having judged far too many bad beers, a fault that I more commonly find with the wider usage of RO and distilled brewing water, is excessive sharpness and higher acridity (and sometimes: thinness) in some porters and stouts. I find it very refreshing to find a porter or stout that was brewed with more alkaline water and the sharpness and acridity have been moderated away so that you can actually enjoy elements such as coffee and chocolate.

How does a judge know that reduced acridity and sharpness came from increased alkalinity as opposed to a more sensible grain bill?

> The other significant benefit of avoiding an overly low mashing pH is that body and mouthfeel won't be lost to excessive proteolysis.

Just to be sure: I don't want my remarks to be taken as being supportive of low mash pH. If you need alkali to hit proper mash pH, use it.

Unfortunately, a brewer can't escape the pH lowering effect of calcium salts by reserving them for the boil. While it is helpful to avoid an overly low mashing pH since that tends to enhance fermentability of the wort and make the resulting beer thinner than intended, adding those salts to the kettle will cause the kettle wort pH to drop...maybe lower than you might prefer.

Mashing water alkalinity can be an asset in brewing some beer styles.

..... With RO water and enough gypsum or CaCl2 to get 50 ppm calcium, a typical mash of 90% base malt and 10% roast barley is going to give you a mash pH around 5.5. Change that to 80%/20% and the mash pH drops to around 5.4. Now mash those same grists with water of 1.75 mEq/L alkalinity and the pHs rise by about 0.1, to 5.6 for 90/10 and 5.5 for 80/20. In the 90/10 case, which would be a much better beer to my way of thinking, one might be considering acid addition rather than alkali.

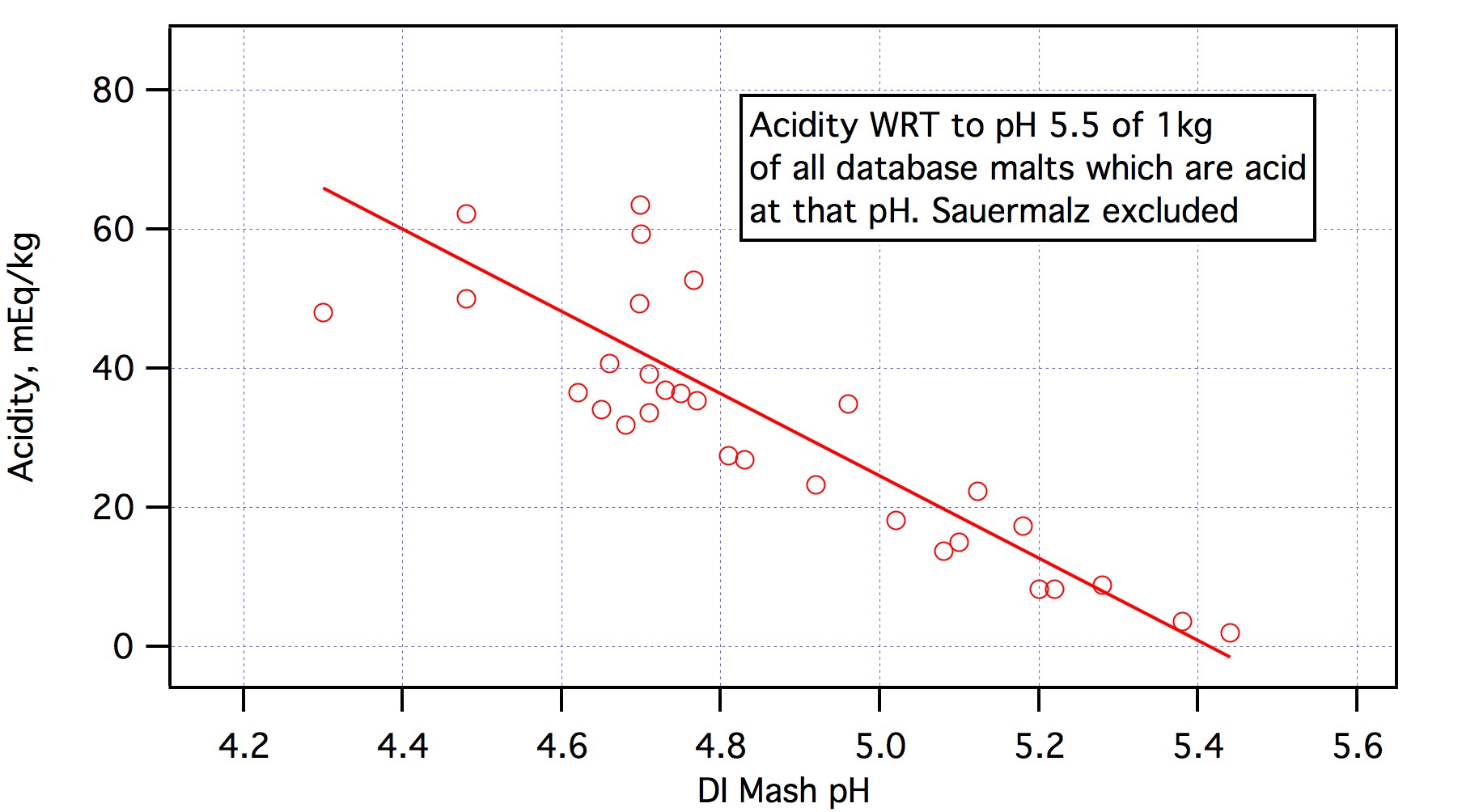

But I've recently discovered from Briess data that Roast Barley (unmalted) is much less acidic than similar L colored malted barleys. Per Briess data even at 600L Roast Barley does not go lower than about 4.65 to 4.55 DI_pH (call it 4.6), but 600L roasted 'Black' (or Black Patent) malted barley can go as low as between 4.3 and 4.24 DI_pH. That seems to be a huge difference with respect to acidity. For those I get with MME version 2.60 (using same criteria as above):

Am I presuming too much acidity for a 600L roasted/malted barley in the DI_pH range of 4.3 to 4.24,

.... And you will find malts at lower pHDI with less acidity than some malts at higher pHDI.

> Not all, but I suspect that some of the scatter may be due to the difference between a deep roasted malt's "reported" Lovibond color, and its "actual" Lovibond color.

Color was not considered in making the plot. It is based only on pHDI, buffering and the acidity to pH 5.5 based on those two parameters. I don't even know what the color of most of those malts is.

> The same sort of lot to lot color (and therefore acidity) variations can be seen to some degree with crystal malts also.

Color has nothing to do with this.

> But since acidity is logarithmic with respect to pH,

Acidity is approximately linear with pH over the pH region of interest to brewers. It is calculated from acidity = a1*(pH - pHDI) + a2*(pH - pHDI)^2 + a3*(pH - pHDI)^3. As a2 and a3 are usually small in magnitude relative to a1, and |pH - pHDI| < 1 in most cases, acidity is nearly (but not quite) linear with pH.
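To make the "nearly but not quite linear" point concrete, here is a small Python sketch of that polynomial. The function names and the coefficient values (a1 = -40, a2 = 5, a3 = 2, pHDI = 5.7) are invented for illustration and are not measurements of any real malt; only the form of the formula comes from the post.

```python
def malt_acidity(ph, ph_di, a1, a2=0.0, a3=0.0):
    """acidity = a1*(pH - pHDI) + a2*(pH - pHDI)^2 + a3*(pH - pHDI)^3"""
    d = ph - ph_di
    return a1 * d + a2 * d**2 + a3 * d**3

def nonlinear_share(ph, ph_di, a1, a2=0.0, a3=0.0):
    """Fraction of the total acidity that comes from the a2 and a3 terms.

    Undefined at ph == ph_di, where the acidity itself is zero.
    """
    full = malt_acidity(ph, ph_di, a1, a2, a3)
    linear = a1 * (ph - ph_di)
    return (full - linear) / full

# Hypothetical malt: pHDI 5.7, evaluated 0.3 pH units below its pHDI.
full = malt_acidity(5.4, 5.7, a1=-40.0, a2=5.0, a3=2.0)
share = nonlinear_share(5.4, 5.7, a1=-40.0, a2=5.0, a3=2.0)
print(f"acidity: {full:.3f} mEq/kg, nonlinear share: {share:.1%}")
```

With coefficients of this relative size the higher-order terms contribute only a few percent, which is why a straight line is a tolerable approximation and also why it is not exact.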

> And that is before the lot's acidity is even considered.

At this point it would probably be prudent to ask "What is your definition of acidity?" I suspect when I use the term it means something substantially different from what you mean by it.

> There may even be inherent acidity differences with respect to color between caramel and crystal malts.

While one can certainly calculate the acidity of the malt WRT a particular pH (if he has pHDI and buffering data for the malt) and plot that against the color of the malt, I don't see any value in that. Kai published some plots of pHDI vs color and I seem to recall that Riffe had some plots of buffering vs color. The correlations certainly aren't tight enough to predict pHDI from color with any accuracy, nor a1 from color with any accuracy, let alone a1*(pH - pHDI), i.e. the acidity.

Does this hold true after separating unmalted deep roast from malted deep roast barley? I believe there is a big difference between them. And then there are the deep roast grains that are wheat based. And also those that are modified to give deep color but not pass any acrid roast/burnt taste to your recipe. Who knows where these deep roast types fit into the acidity scale mix, or even if they do fit in?

To borrow a quote from Arthur Schopenhauer.... 'Talent hits a target no one else can hit. Genius hits a target no one else can see.'

At this point I’m convinced the approach taken for Gen 2 pH predictions will include a combination of DIpH, color and actual mash pH values in order to increase pH prediction accuracy. At least for the foreseeable future.

> A.J., I know that you are working hard to literally deny and thus destroy the malt color vs. acidity relationship in order to promote the superior value of testing for DI_pH and then for 3 titration values (the superiority for this approach of which is undeniable),

Not at all. The color vs acidity relationship must stand on its own merits. If you can find a way to tighten the correlations (e.g. by grouping) beyond what other investigators have found then all I would have to say is "Great!".

> but since for each maltster it all starts out as essentially the same small set of malts (variant by crossbred inheritance, or via direct induced GMO genetic traits, or by regional soils, or regional rain and temperature seasonality), and darker malts clearly have more acidity when considered along with an added process (or malt class) relationship to said acidity (wherein for example caramel malts by class and process are well more acidic in relation to their color than are roast malts), even you cannot fully eradicate the correlation of color to acidity

It has nothing to do with me. There is a correlation. It just isn't a very good one. I only caution people to be wary of using the fits as predictors of malt properties.

> (by classification, as in caramel, base, roast, brown, Munich, Specialty or toasted, wheat or barley, malted or unmalted, etc...), though for years you have tried to talk this correlation down, and I think I understand why. The why being that the R value of correlation is at best only about 0.8 due to malt data scatter.

Exactly.

> In the end, in order to market a software package that can be used by those who will not ever DI_pH measure and then also three-fold titrate each and every individual lot of purchased malt/grain, a similar color relationship to acidity by malt class must sneak into even your software at some juncture, even if somehow kept low key or under the rug.

At this point "my software" should be thought of as a set of functions which can be installed in Excel (it may be offered as an Add In) which will much simplify the process of preparing brewing related spreadsheets. In addition to simplifying life these functions offer a robust implementation of the "proton condition" approach to acid/base chemistry.

> Thus the greatest "initial" defect of the gen 1 programs (that being that there is too much scatter between various lots and across the various maltsters' process differences, and across seasonal or regional acidity differences in malts, or even genetic trait differences between malts due to crossbreeding, or now active GMO manipulation) must at some juncture inevitably pop up for your gen 2 software.

You are entirely focused on the malt models. The greatest defects of the first generation programs are that they try to force linearity on a nonlinear problem and that they are just plain sloppy in their treatment of weak acids and bases. While one can get away with this because the consequences are small errors, there is really, in today's world of previously undreamed-of computing power, no reason to continue to tolerate those errors. Would you run your software on a 16 bit machine? They also use bad malt models, but I readily agree that, practically speaking, there may be no alternative. The difference between first and second generation software is that, given good malt data, a first generation program can give a bad answer but a second generation program will not. In many 1st gen. programs you feed in color or type and the program tries to figure out what the pH will be. In the better ones one can, at least, input pHDI, but one cannot enter anything about the buffering properties of a malt. How can a program figure out the pH of a mix of acids, bases and malts if it doesn't know the buffering properties of the malts? It can't, so it has to guess at what they may be. This merges the malt model with the computational model. They are separate things.

> That your software is leagues above others in its underlying complexity and coding will assuredly baffle and impress the unaware masses who will believe (without actually knowing or understanding why no-less) that such complexity of coding is in and of itself somehow an indication of inherent accuracy,

Certainly many will be baffled and some may become acolytes based on faith, but that doesn't matter. What matters is whether the 2nd gen software is indeed superior, and it clearly is. The ability of the software to do the calculations properly is quite independent of the malt model problem. Feed these functions malt data that does not represent what the brewer has in hand, or give them bad water data or incorrect strength information on the available lactic acid, and they will give you bad predictions/recommendations. But at least in the second gen. approach the models have been separated and the computation part is solid.

> as well as clearly draw the interest of those who specialize in the latest cutting edge software coding abilities (likely no-less also without actually understanding why it should work in the end, but merely bringing to the table the ability to make it work, as well as look good),

Does everyone who uses the STDEVP function in Excel know what it does? No, but hundreds of thousands of people use it every day because they are confident that given a set of data it returns the standard deviation. It is the same here. One need not understand the details of the Henderson-Hasselbalch equation to calculate the charge on an acid via an invocation of the QAcid function. It is, of course, tremendously helpful to both the STDEVP and QAcid user to understand what those functions do and how they work. A person who doesn't understand what standard deviation is probably won't find the STDEVP function very useful, and a user who doesn't understand the role of charge in acid base problems probably won't find QAcid very useful either.
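As a rough illustration of what a charge function of this kind computes, here is a minimal Python sketch. It is not the QAcid function itself, whose signature isn't given in this thread; `acid_charge` is a hypothetical name, and the carbonic acid pK values (6.35 and 10.33, roughly 25 °C with no ionic strength correction) are assumed textbook figures rather than anything from the post.

```python
def acid_charge(ph, pks):
    """Average charge (Eq per mol) carried by a weak acid at the given pH.

    pks: successive pK values of the acid, lowest first.
    Each additional deprotonation step scales the relative abundance of the
    species by the Henderson-Hasselbalch ratio 10**(pH - pK).
    """
    abundance = [1.0]  # fully protonated species, charge 0
    for pk in pks:
        abundance.append(abundance[-1] * 10.0 ** (ph - pk))
    total = sum(abundance)
    # Species n has lost n protons and so carries charge -n.
    return -sum(n * a for n, a in enumerate(abundance)) / total

CARBONIC_PKS = [6.35, 10.33]  # assumed values, see note above

# Bicarbonate goes in at charge -1; at mash pH most of it has picked up a proton.
charge_at_mash_ph = acid_charge(5.5, CARBONIC_PKS)
protons_absorbed = charge_at_mash_ph - (-1.0)
print(f"protons absorbed per mmol bicarbonate at pH 5.5: {protons_absorbed:.2f}")
```

Under these assumptions the result comes out at roughly 0.88 mEq per mmol, in line with the "0.9 mEq/mmol from bicarbonate" used earlier in the thread for the baking soda estimate.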

> but in the end your product will inevitably make educated guesses related to malt color by malt class along the same lines as for all of the others.

A developer using the second gen functions may well choose to do things that way, as it certainly makes things convenient, but it is up to him how he wants to have his user choose malt types. Using myself as a prototype developer and the spreadsheet I've made available to a few guys as an example: malt choice is any malt that I have any knowledge of plus any malt for which the user has pHDI and buffering numbers. In trying to help the fellow with the stout I was forced to choose from the available selections and pick the malts on which I have data which, in my opinion, are likely to be the most like the ones he used. My choices influence the outcome, of course. He said he used Maris Otter. There are lots of Maris Otters out there. I've tested two and they are quite different. One has, with respect to pH 5.5, 84% more acidity. But WRT pH 5.6 it has 174% more acidity (what I love about the 2nd gen approach is how easy it is for me to get numbers like this). This is why I continually tell the guys that are interested in coding this up (as spreadsheets, as iPhone apps, as Web based calculators) that the challenges don't lie in the coding - that's pretty simple - but in the problem of making malt data conveniently available. In this I think we are in complete agreement. But I am firmly of the opinion that this should be done using the second generation calculation approach, as it is sounder. Empiricism has been moved out of the computational model and is completely in the malt model domain where it belongs.

> The real advantage for any of these software packages comes from breaking down malts by maltster, region, process, seasonality, etc... rather than lumping all of them into a small handful of classes meant to merely average across all of such variants.

The more classes and subclasses you have the more complex the access becomes. How big a tree can a user tolerate before he gets to the point where he'd rather just pick a malt from a list?

> This same thing could be done for the gen 1 products to raise their potential for accuracy.

Well no. The first generation approach has the inherent inaccuracies imposed on it by linearization. The problems with mishandling of weak acids can be fixed. As an example Bru'n Water now correctly handles bicarbonate additions.

> And they could do it without all of the spectacularly baffling and complex coding.

The second generation coding is certainly not spectacularly baffling nor complex. It is quite simple. OK, it uses Newton-Raphson, and when people see mention of that they throw up their hands. But they were all taught Newton-Raphson in high school and have simply forgotten it. This is high school stuff!

> All they need is to incorporate hundreds of "individual" malts "hard" data instead of merely averaging across a small handful of (sometimes inferred) malt "classes" as they presently do, even though in the end there will still inevitably be myriads of guesses as to whether or not the malt in the hand of the brewer will actually match to the malt selection choice residing in a coded database.

But how do you get this "hard data"? You have to go to the lab and titrate. A malt is characterized by its titration curve. Perhaps one can find a grouping scheme that shows tight correlation between buffering and pHDI, but you won't know that until you obtain hard buffering data.

> Just as for your software...

Yes, just as for my software. We still have the malt problems, but with "my software" we don't have to live with the computational problems as well.

> But on top of this, all of the gen 1 software (mine being no exception) is rife with some level of errors that go beyond the "initial" color to acidity related poor 'R' correlation issue, and their programmers must work to squash all of such additional math-model errors.

That's easily done. I did it in a couple of weeks. Toss the empirical approach and go to the charge balance model. This requires iterative solution which is easily implemented with Newton-Raphson.
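For readers who have forgotten what the Newton-Raphson step looks like, here is a stripped-down Python sketch of the idea. It is an illustration only and not the code discussed in this thread: it balances malts against each other using the cubic acidity formula quoted earlier, and leaves out the water alkalinity, calcium and acid terms that a full charge balance would add. The `Malt` class, `mash_ph` function and every coefficient below are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Malt:
    mass_kg: float
    ph_di: float      # pH of the malt mashed alone in distilled water
    a1: float         # mEq/(kg*pH), hypothetical value
    a2: float = 0.0
    a3: float = 0.0

    def acidity(self, ph):
        d = ph - self.ph_di
        return self.a1 * d + self.a2 * d**2 + self.a3 * d**3

    def slope(self, ph):
        # Derivative of acidity with respect to pH.
        d = ph - self.ph_di
        return self.a1 + 2.0 * self.a2 * d + 3.0 * self.a3 * d**2

def total_acidity(malts, ph):
    return sum(m.mass_kg * m.acidity(ph) for m in malts)

def mash_ph(malts, guess=5.5, tol=1e-10, max_iter=50):
    """Find the pH at which the malts' acidities sum to zero."""
    ph = guess
    for _ in range(max_iter):
        f = total_acidity(malts, ph)
        df = sum(m.mass_kg * m.slope(ph) for m in malts)
        step = f / df          # Newton-Raphson update
        ph -= step
        if abs(step) < tol:
            return ph
    raise RuntimeError("Newton-Raphson did not converge")

# Hypothetical grist: 9 lb base malt and 1 lb black malt.
grist = [
    Malt(mass_kg=4.08, ph_di=5.7, a1=-40.0, a2=5.0),
    Malt(mass_kg=0.45, ph_di=4.3, a1=-50.0),
]
estimate = mash_ph(grist)
print(f"estimated mash pH: {estimate:.3f}")
```

Because the malt curves are nearly linear, the iteration settles in a handful of steps from any sensible starting guess. The quality of the answer depends entirely on the malt numbers fed in, which is the separate malt model problem.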

> This I hope is where your software's true advantage currently lies.

Yes, it clearly is.

> But in reality only time and hard correlation to real batches mash pH data will tell.

The charge balance model is solid. You really can't argue with it (I say this with some trepidation as one of the foundations of science is to question EVERYTHING). If you get a bad answer from a program based on the charge model then

> To borrow a quote from Arthur Schopenhauer.... 'Talent hits a target no one else can hit. Genius hits a target no one else can see.'
>
> At this point I’m convinced the approach taken for Gen 2 pH predictions will include a combination of DIpH, color and actual mash pH values in order to increase pH prediction accuracy. At least for the foreseeable future.

One of the main advantages of the Gen II approach is that you can use any or all of the above in arriving at a mash pH prediction. It depends on how you invoke the functions and on what you feed them. As for the quote - Wow! I'm afraid I can only sign up for the talent part, if that. I could have, and should have, done this years ago but was too lazy. I have had the privilege of working with several geniuses in my career. They are amazing people. I ain't one of them. One of the most interesting things I noticed about them is that they don't sleep.