Ok, so I'm a bit of a tech nut. I've loved computers since I was little (if you can call a Sinclair ZX Spectrum 16K a computer!). But the point is, I'm starting to find a lot of uses for AI in my home and work life.

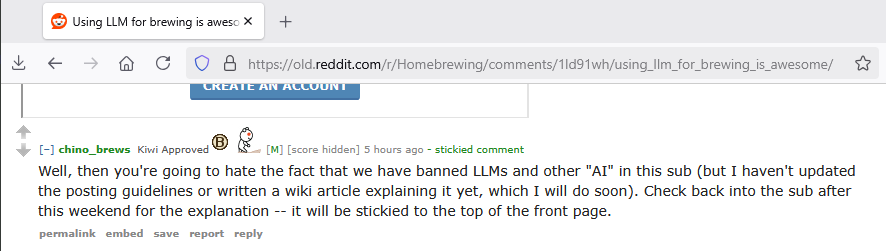

Has anyone else here been using AI to get help or provide information on brewing?

If you do, which AI engine do you use? I ask because I find that while ChatGPT is fast, it's also a lot less accurate: it scrapes websites and doesn't seem to sift out the good answers very well.

So I started using Grok (yes, I'm aware of its relationship to certain well-known figures, but I'm not interested in that, just in what the AI can help me with). It's a fair bit slower than other AIs. However, because it has full access to X, it seems able to pull a _lot_ more detailed information about things.

One thing I've noticed and learnt about AI is that to get the best response from it, you need to tailor your opening statement properly.

If you ask a simple one-line question, chances are you'll get a rubbish response back that's unhelpful and a waste of your time. Then you may not bother again, because the first attempt left a bad taste in your mouth.

Here's a good example of a tailored opener:

> Hello Grok,
>
> I'm a home brewer in Phoenix, Arizona. The ambient temperature of the rooms in my house never goes below about 72°F, which isn't conducive to brewing lager, as that requires much lower temps.
>
> However, I would really like to try my hand at brewing a refreshing lager for summertime drinking. I don't want to buy another fridge or freezer to use as a fermentation chamber, due to lack of space and also the cost.
>
> I would prefer not to spend a lot of money on this, and it needs to be something that doesn't require me to attend to it every few hours. The lower the maintenance, the better.
>
> With all that in mind, what options would you suggest for me?

If you want to see the response I got, which was quite extensive and gave me some great options, including one I hadn't thought of, here's the link:

https://grok.com/share/c2hhcmQtMg==_34b2e09d-aa94-4353-a79d-eec5a04e64a2

If anyone's had any experience using other AI engines, I'd love to know what you thought of them.

![Craft A Brew - Safale S-04 Dry Yeast - Fermentis - English Ale Dry Yeast - For English and American Ales and Hard Apple Ciders - Ingredients for Home Brewing - Beer Making Supplies - [1 Pack]](https://m.media-amazon.com/images/I/41fVGNh6JfL._SL500_.jpg)