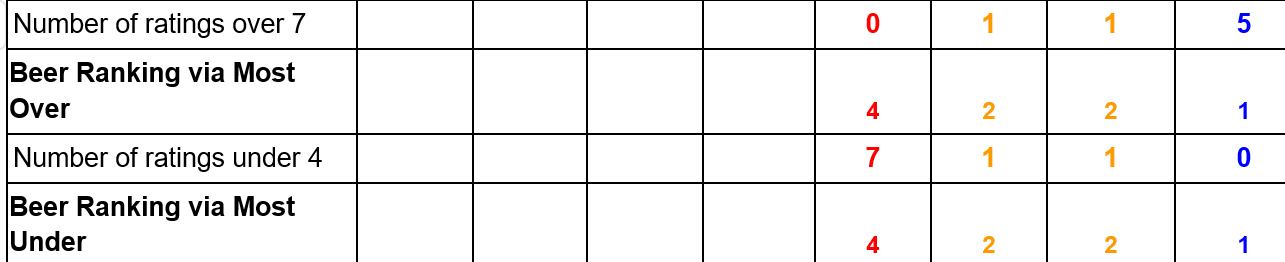

Yeah, not questioning the process for this round. I tend to think that factors like appearance and fermentation performance are valid and should be taken into account. I am more curious whether the Clean Boil just reduces haze slightly or actually makes a perceptible impact on the character of the final beer. It would be great if you collected and shared that data.

There was some discussion way up in this thread about the pros and cons of taking the visual away from the tasting. If the focus were only flavor, that would make sense, but everyone drinks beer in clear glasses. How does process affect the beer in the glass with all things considered? I'm not suggesting that the clear vs. hazy appearance didn't affect the overall rating. It probably did, because it generally does. I have enough of the beer left to have all the club's judges taste them blind next month, and I'll publish that data when it happens.

I have been brewing BIAB for almost 5 years. My process is often not much different from your DBDF batch. Sometimes my beers drop crystal clear quickly, and sometimes they hang on to a bit of haze that takes several weeks to drop out, though they do tend to be clearer than what many of my friends make.
