Thank you... exactly the point I was making.

Right, but that wasn't the overall point. I'm simply agreeing with AcidRain in saying that simply treating your mash water to lower its pH to, say, 5.2 isn't the proper way to get a mash pH of 5.2 once the grains are added. Both need to be taken into account. Again, I'll admit I misread the replies between Augie and AcidRain. I thought AcidRain was saying to only add water treatments after mashing in and taking a reading, when he was actually responding to Augie, believing from Augie's past reply that he was only pre-treating his water with the sole intention of bringing it down to the mash pH he desired.

Rev.


PH Meter Replacement

- Thread starter sgraham602


ChocolateMaltyBalls

Well-Known Member

Having used various pH meters for lab work, I think it's more important to find a durable meter and stick with it. Even with high end bench meters it's unlikely to get two of them to read the same. This is one of those cases where precision is better than accuracy.

augiedoggy

Well-Known Member

Correct... I actually dusted off my copy of "The New Complete Joy of Home Brewing" and reread the "advanced home brewing and water" chapter tonight... now I just need to figure out where and how to use the calculators and tools in Brewer's Friend.

I just did the same and immediately put it back on the shelf. Those few pages are full of errors and misconceptions, not to mention the fact that there have been many 'discoveries' since that book was published which make it easy for the home brewer to tackle the water management tasks which CP suggests are way beyond him.

augiedoggy

Well-Known Member

yeah I was wondering about that too... There seems to be a lot of outdated info in the book.

Having used various pH meters for lab work, I think it's more important to find a durable meter and stick with it. Even with high end bench meters it's unlikely to get two of them to read the same. This is one of those cases where precision is better than accuracy.

In a pH meter accuracy and precision are, at least at the outset, the same thing. OK, now that I have your attention, let's discuss what that really means, and let me start by asking you the question: "When a manufacturer specifies that his meter has accuracy of 0.05 pH, what does that mean?" I have seen lots of pH meter specs and in only one case did it say what that means. It refers to the stability of the electrode (and electronics, but with modern digital implementations the instability of the electronics is insignificant compared to that of the electrode). If the meter can measure voltage and temperature to a certain precision then the least detectable difference in pH is ∆V/57.88 pH, where ∆V is the precision in voltage measurement (in mV) and 57.88 mV/pH (20 °C) is a constant that depends on temperature. If, for example, ∆V is 0.2 mV then ∆pH = 0.0035 pH and, we presume, the manufacturer would display 0.01 pH resolution. We assume that 200 µV (0.2 mV) is the A/D resolution and that the electronic noise in this design would be 63 µV or less (10 dB down). We also note that quantizing the display to 0.01 pH introduces quantizing noise of 0.0029 pH (0.01/sqrt(12)) in the readings.
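For anyone who wants to check that arithmetic, here is a minimal sketch. The function names are made up for illustration, and the 57.88 mV/pH slope is simply the figure quoted in the post (the textbook Nernst slope at 20 °C is nearer 58.2 mV/pH), so treat the results as reproducing the post's numbers rather than as reference values.

```python
import math

# Slope as quoted in the post for 20 °C (assumption: taken as-is, not re-derived).
SLOPE_MV_PER_PH = 57.88

def least_detectable_ph(delta_v_mv, slope=SLOPE_MV_PER_PH):
    """Smallest pH step resolvable for a given voltage resolution in mV."""
    return delta_v_mv / slope

def quantizing_noise(display_step):
    """RMS error introduced by rounding to display_step (uniform error model)."""
    return display_step / math.sqrt(12)

def ten_db_down(level):
    """Amplitude that sits 10 dB below `level` (divide by sqrt(10))."""
    return level / math.sqrt(10)

print(round(least_detectable_ph(0.2), 4))  # 0.0035 pH for 0.2 mV resolution
print(round(quantizing_noise(0.01), 4))    # 0.0029 pH for a 0.01 pH display
print(round(ten_db_down(200.0), 1))        # 63.2 uV, 10 dB below 200 uV
```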

We dip the electrode into 4 and 7 buffers and solve a pair of linear equations to come up with slope and offset numbers. The offset is added to the electrode voltage reading and the result is then gained by the slope number. If you do all this and then put the electrode back in 4 buffer (or leave it there while the calculations are being done) and nothing has changed, the scaled offset voltage calculated by the meter will be (7 - 4.00221)*57.88 = 173.512 ~ 173.6 mV (0.2 mV precision). If moved to pH 7 buffer and nothing has changed, the calculated scaled offset voltage will be (7 - 7.01624)*57.88 = -0.9400 ~ -1 mV, assuming both buffers were at 20 °C. 4.00221 is the pH of the standard 4 buffer at 20 °C and 7.01624 that of the 7 buffer. Given 173.6 mV the meter divides by 57.88 and subtracts this from 7 to get 7 - 173.6/57.88 = 4.0007, which it rounds to two decimal places to show 4.00. Relative to the true buffer pH of 4.00221 this represents an error of 0.0022. Doing the same for the 7 buffer, the meter computes 7 + 1/57.88 = 7.0173 and displays, to two decimal places, 7.02, while the true 7 buffer pH is 7.01624, so the computed value is in error by 0.0010.

Thus, at calibration, the meter is dead on, to two decimal places with its accuracy set by its precision.
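The two-point calibration described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the gain/offset model, the function names, and the raw electrode voltages (165.0 mV and -4.0 mV) are hypothetical, while the slope and buffer pH values are the ones quoted in the post.

```python
SLOPE = 57.88                 # mV per pH at 20 °C, as quoted in the post
PH4, PH7 = 4.00221, 7.01624   # buffer pH values at 20 °C, from the post

def calibrate(v4_mv, v7_mv):
    """Solve gain*(v + offset) = (7 - pH)*SLOPE for the two buffer readings."""
    e4 = (7 - PH4) * SLOPE    # target scaled voltage in the 4 buffer
    e7 = (7 - PH7) * SLOPE    # target scaled voltage in the 7 buffer
    gain = (e4 - e7) / (v4_mv - v7_mv)
    offset = e7 / gain - v7_mv
    return gain, offset

def read_ph(v_mv, gain, offset, step_mv=0.2):
    """Apply offset and gain, quantize to the voltage resolution, convert to pH."""
    scaled = gain * (v_mv + offset)
    scaled = round(scaled / step_mv) * step_mv
    return 7 - scaled / SLOPE

# Hypothetical raw electrode voltages seen in the two buffers.
gain, offset = calibrate(v4_mv=165.0, v7_mv=-4.0)
print(f"{read_ph(165.0, gain, offset):.2f}")  # 4.00
print(f"{read_ph(-4.0, gain, offset):.2f}")   # 7.02
```

Whatever raw voltages the electrode produces, the calibration maps them onto the same scaled values, which is why the displayed numbers at calibration depend only on the buffers and the voltage resolution.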

It would be well at this point to contemplate the fact that the typical NIST technical buffer is manufactured to a tolerance of ±0.02 pH and realize that it is, at calibration, really the buffers that determine the accuracy of the meter.

Now let's go away for 10 minutes and come back. What does the meter read now in 4 buffer? If the electrode is stable, i.e. it does not drift, it will still read 4.00. How about at 20 minutes, a half hour etc.? Any real electrode will, in fact, drift and may be off by a few hundredths or, in a cheap meter, many hundredths of a pH unit. It is this drift which really determines the accuracy of which a pH meter is capable. A highly precise meter that drifts is inaccurate (and you're going to have to talk to me a bit to convince me your meter should display 3 digits beyond the decimal point, though quite a few do). This is why I put so much emphasis on the stability test described in the Sticky.

It is clear from all this that an 'accuracy' spec on a meter is useless unless the conditions (e.g. electrode in 4 buffer in a water bath) and time duration (e.g. over a period of 1 hour) are specified. As I said at the outset the only time I have ever seen this done is for the Hach pH Pro+.
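The Sticky's actual procedure isn't reproduced in this thread, so the following is only a sketch of the idea: leave the electrode in one buffer, log the reading at intervals, and summarize how far it wanders. The function name and the logged readings are made up for illustration.

```python
def drift_report(readings):
    """readings: list of (minutes, pH) pairs, all taken in the same buffer.

    Returns the worst deviation from the first reading and the average
    drift rate in pH per hour between the first and last readings.
    """
    t0, ph0 = readings[0]
    worst = max(abs(ph - ph0) for _, ph in readings)
    t_end, ph_end = readings[-1]
    rate_per_hour = (ph_end - ph0) / (t_end - t0) * 60
    return worst, rate_per_hour

# Hypothetical log: electrode left in pH 4 buffer for an hour.
log = [(0, 4.00), (10, 4.00), (20, 4.01), (30, 4.01), (60, 4.03)]
worst, rate = drift_report(log)
print(f"max deviation {worst:.2f} pH, drift {rate:+.2f} pH/hour")
```

A spec that quoted both numbers, along with the conditions of the test, would say far more than a bare 'accuracy' figure.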

Carlscan26

Well-Known Member

And if you want something which is more accurate then this

http://www.amazon.com/gp/product/B00W3OM3LA/?tag=skimlinks_replacement-20

Is accurate to .05 with a resolution of 0.01. Dial that in on a pH 4 or 5 NIST traceable buffer and you'll be well within any tolerable range for brewing

I'd avoid this meter.

1. The Amazon title says this: 0.05pH High Accuracy Pocket Size pH Meter with ATC and Backlit LCD, 0-14 pH Measurement Range, 0.01 Resolution Handheld, Measure Household Drinking Water(Yellow)

2. The summary says: Large LCD screen, glass probe, 0.05pH readout accuracy, 0.01 Resolution

3. The details list: Resolution: 0.1 PH

4. The manual says: Resolution 0.01 pH and Accuracy +/- .1 pH

So basically if you read 5.3 it could be 5.3 or 5.2 or 5.4. The hundredths digit is worthless.

I may play with it tonight and see how stable it is but it's being sent back and I'm going to buy the MW102 or the Hach.

I calibrate it with NIST traceable buffers and it consistently reads correctly and accurately when checked against our higher end models at work. When it dies I will get a better model with a replaceable probe, but as AJ notes above and in his other more detailed post on stability testing, the utility of the meter is more defined by how it behaves in reality.

Carlscan26

Well-Known Member

What you're saying is that it's stable and consistent. But what kind of resolution can you get out of it? Alter that solution by .1 and see if the meter registers the difference.

I was all in for trying it given the stated specs (which I admittedly glanced at) but once I had the manual in hand it really gave me a moment to pause and rethink my decision. If I'm going to go to the trouble to measure and adjust pH then I want to be doing it right.

nealm

Well-Known Member

I have to ask: in the grand scheme of things, will it make a real difference in the beer if the pH is 5.2, 5.3 or 5.4? Is the goal to be precise or just to get into the proper range for good beer?

For sure! Potentially profound differences. But it will still be beer. Having the understanding of what pH you prefer for particular styles and then having the ability to target them, can be a good thing for great beer.

Also if you are brewing a particularly light or dark beer, and take a mash pH that is inaccurate, you might end up trying to make an inappropriate correction to the pH which may be significantly detrimental.

For what it is worth... I own an MW102. I also own the Hach Pocket Pro+. The Hach is simply easier to use due to the lack of unwieldy cables, and it is more compact. I've already had to replace the MW probe once; it lasted about a year in spite of proper storage. The Hach manual didn't recommend any particular manner of storage (electrode submerged in storage solution, for instance). I haven't used it much yet, having been on brewing hiatus since last February. Maybe used it three or four times before that. The goofy instructions that come with the Hach are not very helpful, but there is a user manual you can download which is better for learning how the meter works and how to properly calibrate it. The MW meter seemed to have terrible drift between calibration and the time you took a measurement. I wouldn't trust the reading unless I calibrated it immediately before taking a measurement. Towards the end of the first probe's lifetime, it took an increasingly long time to calibrate, and the meter would "decide" when it was ready to use. The Hach was about the same price as the Milwaukee when I bought mine, maybe a little more due to the S&H fees.

The cabled vs pocket configuration argument is a potato/potahto thing IMO. All fancy lab instruments, of course, use a cabled arrangement.

As to storage of the Hach unit I think everyone now agrees (and this includes Hach who weren't really sure at the outset) that it should be stored upright (stick it in a coffee mug) with a few drops of water in the cap. Doesn't have to be RO or DI.

augiedoggy

Well-Known Member

So I asked this in the beginning of the thread and most seem to agree .1 resolution is plenty? I think we are splitting hairs here, and if an experiment were to be done I highly doubt anyone could tell the difference between a beer with a 5.2 mash and one at 5.3 or even 5.4 for that matter... I'd love to see some sort of evidence either way though.

Carlscan26

Well-Known Member

I am more concerned with accuracy than resolution. An accuracy of .1 is too wide of a margin (2 points) when we're trying to stay in a 4 point window.

augiedoggy

Well-Known Member

See, I am more concerned with whether this resolution has an actual effect on the beer... and it really seems it's a moot point if the meter is just used for brewing... If not, someone has to have collected data on this to state otherwise, right? Or are people just making assumptions either way here?

We seem to have two opinions here... Those with expensive high end meters who say it matters, and those with cheaper meters (and one or two with better meters) who say it's not that critical, since there is a range we want to hit and other factors such as the grain bill will throw off our exact numbers even if we had them. No one seems to have anything to prove either opinion though... Unless I missed it?

so I asked this in the beginning of the thread and most seem to agree .1 resolution is plenty?

I would emphatically disagree with that. Precision and accuracy are related. In setting precision one introduces quantizing noise equal to the precision divided by sqrt(12). Thus a meter that displays to 0.01 has introduced 0.00288 pH quantizing noise and one that displays to 0.1 has 0.0288 pH (rms in both cases). A good designer does not throw away appreciable accuracy, and the general rule of thumb is that quantizing noise should be at least 10 dB (a factor of sqrt(10) = 3.16228) below the other system noises. Thus if one is to limit his display to 0.01 resolution the other system 'noises' (errors) are presumed to be about 0.00288*3.16228 = 0.0091 pH. In fact a good meter using NIST traceable 4 and 7 buffers of ±0.02 tolerance is capable of delivering about 0.0141 pH error at pH 5.5 (halfway between the two buffers) and, when using ±0.01 buffers, about half that. In this case 0.01 resolution is justified and, unless the inherent accuracy of the meter is to be thrown away, necessary.

Now if the intrinsic accuracy of the meter is 10 times this, i.e. 0.141, a quantizing noise level of 0.00288 is 20*log(0.141/0.00288) = 33.8 dB below that and we are wasting money on a 0.01 display, as the quantizing noise from a 0.1 display is 20*log(0.141/0.0288) = 13.8 dB below (still more than 10 dB below the inherent noise of the meter). Thus the presence of a 0.1 precision display says that the basic accuracy of the meter is about 0.1 pH.
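A quick way to check the decibel margins is sketched below. The function names are invented for illustration; the only inputs are the meter error figures and display steps discussed above, with quantizing noise taken as step/sqrt(12).

```python
import math

def quantizing_noise(step):
    """RMS quantizing noise for a display that rounds to `step`."""
    return step / math.sqrt(12)

def margin_db(meter_error, step):
    """How many dB the display's quantizing noise sits below the meter's own error."""
    return 20 * math.log10(meter_error / quantizing_noise(step))

# (intrinsic meter error in pH, display step in pH)
for err, step in [(0.0141, 0.01), (0.141, 0.01), (0.141, 0.1)]:
    print(f"error {err} pH, display step {step}: {margin_db(err, step):.1f} dB")
```

With the sqrt(12) figures this gives roughly 13.8 dB for the good meter with a 0.01 display, 33.8 dB for the poor meter with a 0.01 display, and 13.8 dB for the poor meter with a 0.1 display, so in each matched case the display step clears the 10 dB rule of thumb without much to spare.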

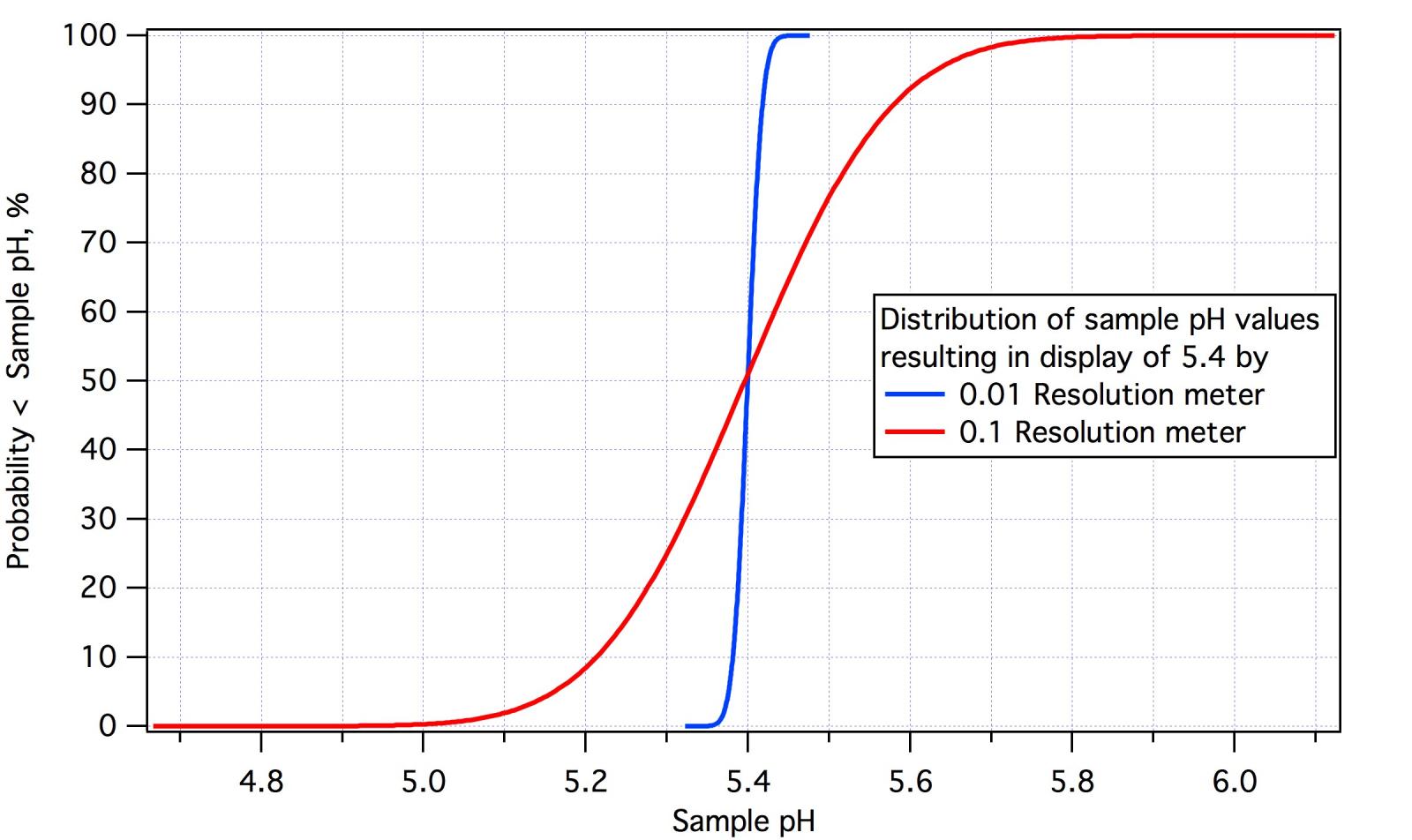

The implications of this are seen in the graph, which shows the distribution of true solution pH's which would cause a reading of 5.4 or 5.40 on, respectively, 0.1 and 0.01 resolution meters. The inherent accuracy of the 0.01 meter is 0.014, as that is about what one can expect at mash pH with ±0.02 buffers, and for the 0.1 meter it is 10 times that, as design practice dictates that this would be the level appropriate for a 0.1 meter. The percentages on the left axis are the probabilities that the pH of the sample is less than the pH indicated on the horizontal axis. Thus the probability that the actual sample pH is less than 5.4 is 50% for either meter. For the 0.1 meter (red curve) there is a 25% probability that the actual pH is less than 5.3 and a 75% chance that it is less than 5.5, which means a 25% chance that it is greater than 5.5, or a 50% chance that it is between 5.3 and 5.5. There is also a 10% chance that the actual pH may be less than 5.2 and a 10% chance that it may be greater than 5.58. This should make it clear why 0.1 resolution is not acceptable to a brewer. 5.4 on the display means the actual pH could be between 5 and 5.8, though it is most likely (82%) to be between 5.2 and 5.6. Conversely, with 0.01 pH resolution a reading of 5.40 means the pH is between 5.38 and 5.42 with 80% probability.
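The probabilities read off the graph can be approximated with a few lines of code. This is a sketch under assumptions that are mine, not the post's: the true pH is modeled as normally distributed around the reading, and the meter error and quantizing noise are combined as a root sum of squares. The function names are hypothetical.

```python
import math

def normal_cdf(x, mean, sigma):
    """Probability that a normal variable with the given mean and sigma is below x."""
    return 0.5 * (1 + math.erf((x - mean) / (sigma * math.sqrt(2))))

def prob_between(lo, hi, reading, meter_error, step):
    """Chance the true pH lies in [lo, hi] given a displayed reading."""
    # Assumption: meter error and quantizing noise add as independent errors.
    sigma = math.hypot(meter_error, step / math.sqrt(12))
    return normal_cdf(hi, reading, sigma) - normal_cdf(lo, reading, sigma)

coarse = prob_between(5.2, 5.6, 5.4, meter_error=0.141, step=0.1)
fine = prob_between(5.38, 5.42, 5.4, meter_error=0.0141, step=0.01)
print(f"0.1 meter, true pH within 5.2 to 5.6:    {coarse:.0%}")
print(f"0.01 meter, true pH within 5.38 to 5.42: {fine:.0%}")
```

Under this model both intervals come out a little above 80%, in line with the figures quoted from the graph; the point is that the same confidence spans 0.4 pH on the coarse meter and 0.04 pH on the fine one.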

I think we are splitting hairs here, and if an experiment were to be done I highly doubt anyone could tell the difference between a beer with a 5.2 mash and one at 5.3 or even 5.4 for that matter... I'd love to see some sort of evidence either way though.

It has certainly been my experience that there is a great difference between a lager brewed at a mash pH of 5.65 and one brewed at 5.45, and a less striking, but nevertheless discernible, one for differences of 0.1.

We seem to have two opinions here... Those with expensive high end meters who say it matters, and those with cheaper meters (and one or two with better meters) who say it's not that critical, since there is a range we want to hit and other factors such as the grain bill will throw off our exact numbers even if we had them. No one seems to have anything to prove either opinion though... Unless I missed it?

A brewer with a digital thermometer that had a resolution of 5 °F ( i.e. 120, 125, 130... are the only possible readings) could well argue that there is nothing wrong with the beers he makes with it. That's because he has not experienced the better beers that he could make with a thermometer with 1 °F resolution.

It is not sufficient to be between 5.2 and 5.6. Each type of beer has an appreciably narrower range that produces the best results. When we say 5.4 - 5.6 is good for lagers we don't mean you can be anywhere in that range. We mean you should shoot for that range to start and then find the point within it that gives the most pleasing results.

Carlscan26

Well-Known Member

AJ - that is some impressive analysis and explanation!

It is not sufficient to be between 5.2 and 5.6. Each type of beer has an appreciably narrower range that produces the best results. When we say 5.4 - 5.6 is good for lagers we don't mean you can be anywhere in that range. We mean you should shoot for that range to start and then find the point within it that gives the most pleasing results.

Not disagreeing with you in any way, I'd just like to pose a question I am curious about. Before the advent of modern pH readings, especially those of modern day accuracy, how did all other producers of (in this case just for the quote) lagers manage to produce their beers within a specific "ideal" range and also keep them in that range given any natural variances?

Again, not posing this question to debate your point as I don't disagree, I'm really just curious to know how these things could've been kept fairly in line before modern tech or were they just not kept in line and their beers often varied dramatically?

Rev.

I'm really just curious to know how these things could've been kept fairly in line before modern tech or were they just not kept in line and their beers often varied dramatically?

Perhaps 'not sufficient' was too strong. I made passable, even good, beer before I started controlling pH, but it wasn't until I did that I started making very good beer. Certainly beer had been made for thousands of years before the concept of pH was even introduced early in the 20th century, and clearly those brewers did not monitor pH with a pH meter. But those brewers, just based on observation with nothing but their senses, were able to formulate recipes and brewing practices that produced, over time, beers that were better than other beers, though I am sure that their beers were much more variable than beers are today.

Why is a dark beer dark? Presumably because the brewers of that beer recognized that it tasted better when a certain amount, but not too much, of dark malt was added to the grist. Without fully realizing what they were doing, the brewers that used this dark malt were controlling for pH. Perhaps they were even sophisticated enough to know that the amount of dark malt should be adjusted for each barley harvest, each batch of malt or seasonal variations in water supply. This is speculation of course. I was not there!

Remember that there was industrial brewing in a time when neither thermometers nor hydrometers were available either.
