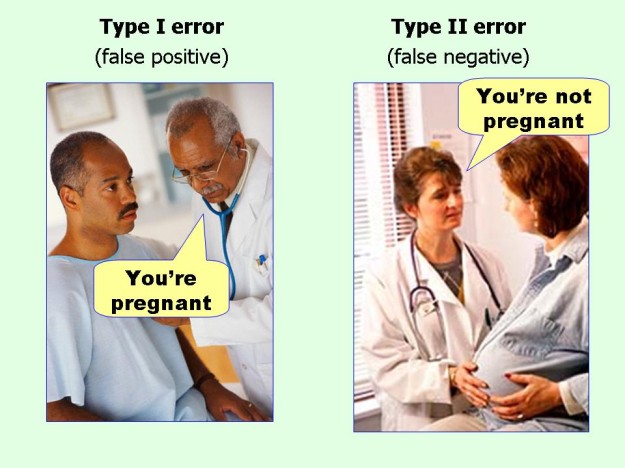

Here is more data on the first 33 Brulosophy experiments, including the ones I posted earlier. At the end of each data line I've added an I if the experiment was significant at the 0.05 level with respect to Type I error, and a II if it was significant at the 0.05 level with respect to Type II error, assuming a differentiability (proportion of distinguishers, Pd) of 0.2.

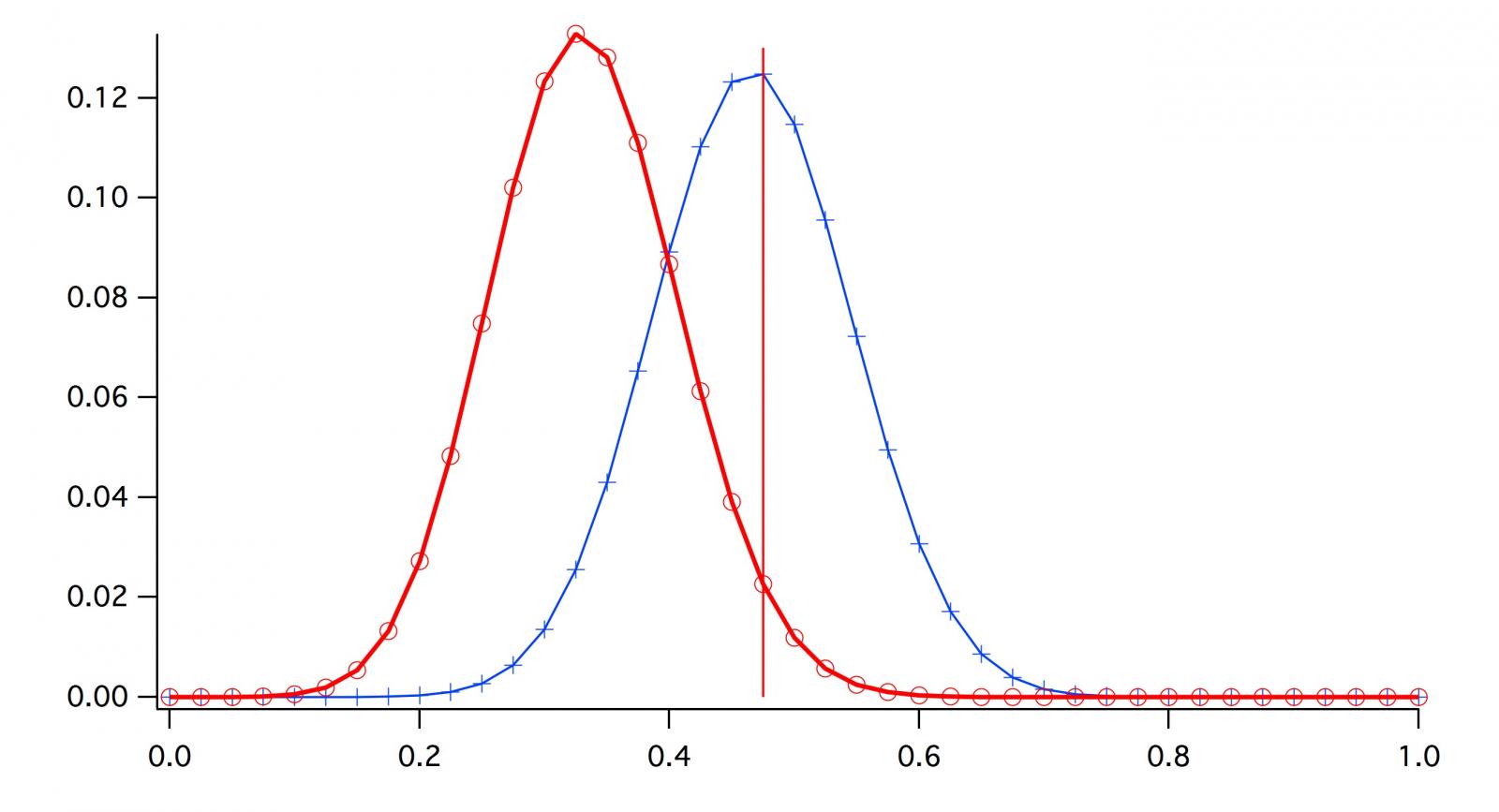

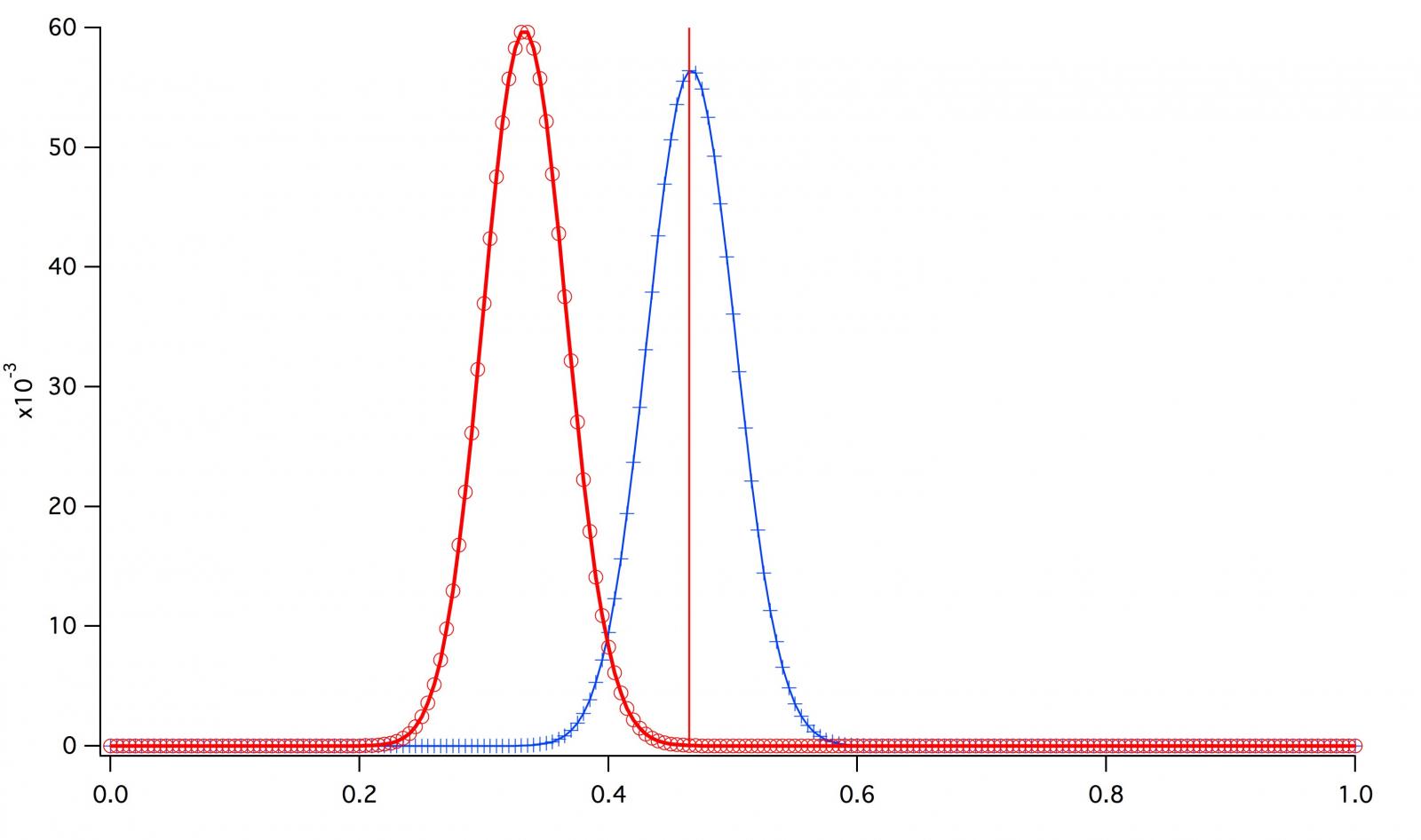
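The I and II flags can be reproduced with exact binomial tails. In a triangle test, a taster who cannot tell the beers apart picks the odd sample with probability 1/3. If a proportion Pd of the population can distinguish them, a random taster is correct with probability pc = Pd + (1 - Pd)/3. Below is a minimal sketch. It assumes the Type I p-value is P(X >= x) at pc = 1/3 and the Type II p-value is P(X <= x - 1) at Pd = 0.2. I chose that second convention because it reproduces the p Type II column (e.g. Hochkurz and Dry Hop Length). The function names are hypothetical.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Exact probability of exactly k successes in n Bernoulli(p) trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def triangle_p_type1(n: int, correct: int) -> float:
    """P(X >= correct) when nobody can tell the beers apart (pc = 1/3)."""
    return sum(binom_pmf(k, n, 1 / 3) for k in range(correct, n + 1))


def triangle_p_type2(n: int, correct: int, pd: float = 0.2) -> float:
    """P(X <= correct - 1) when a proportion `pd` of tasters can tell them apart.

    Assumption: the "fewer than observed" tail, chosen because it matches
    the table's p Type II column.
    """
    pc = pd + (1 - pd) / 3  # distinguishers are always right, the rest guess at 1/3
    return sum(binom_pmf(k, n, pc) for k in range(correct))


if __name__ == "__main__":
    # Hochkurz row: 26 panelists, 7 correct
    print(f"p Type I  = {triangle_p_type1(26, 7):.4f}")  # 0.8150
    print(f"p Type II = {triangle_p_type2(26, 7):.4f}")  # 0.0118
```

A row gets an I when the first value is at or below 0.05 and a II when the second one is.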

Of these 33 experiments, 12 (36%) were significant with respect to Type I error (the only type the experimenters considered). This means that the hypothesis that tasters could tell the difference could be confidently accepted. But another 6 (18%) were significant with respect to Type II error. For those, we can be confident that no more than 20% of tasters could tell the beers apart. The investigators' statements about these tests are exemplified by their comments on the last test in the data table below:

> ...suggesting participants in this xBmt were unable to reliably distinguish a beer fermented with GY054 Vermont IPA yeast from one fermented with WY1318 London Ale III yeast.

That's not a suggestion. There is good reason to believe that the beers are indistinguishable.

> The following information is being shared purely to appease the curious and should not be viewed as valid data given the failure to achieve significance.

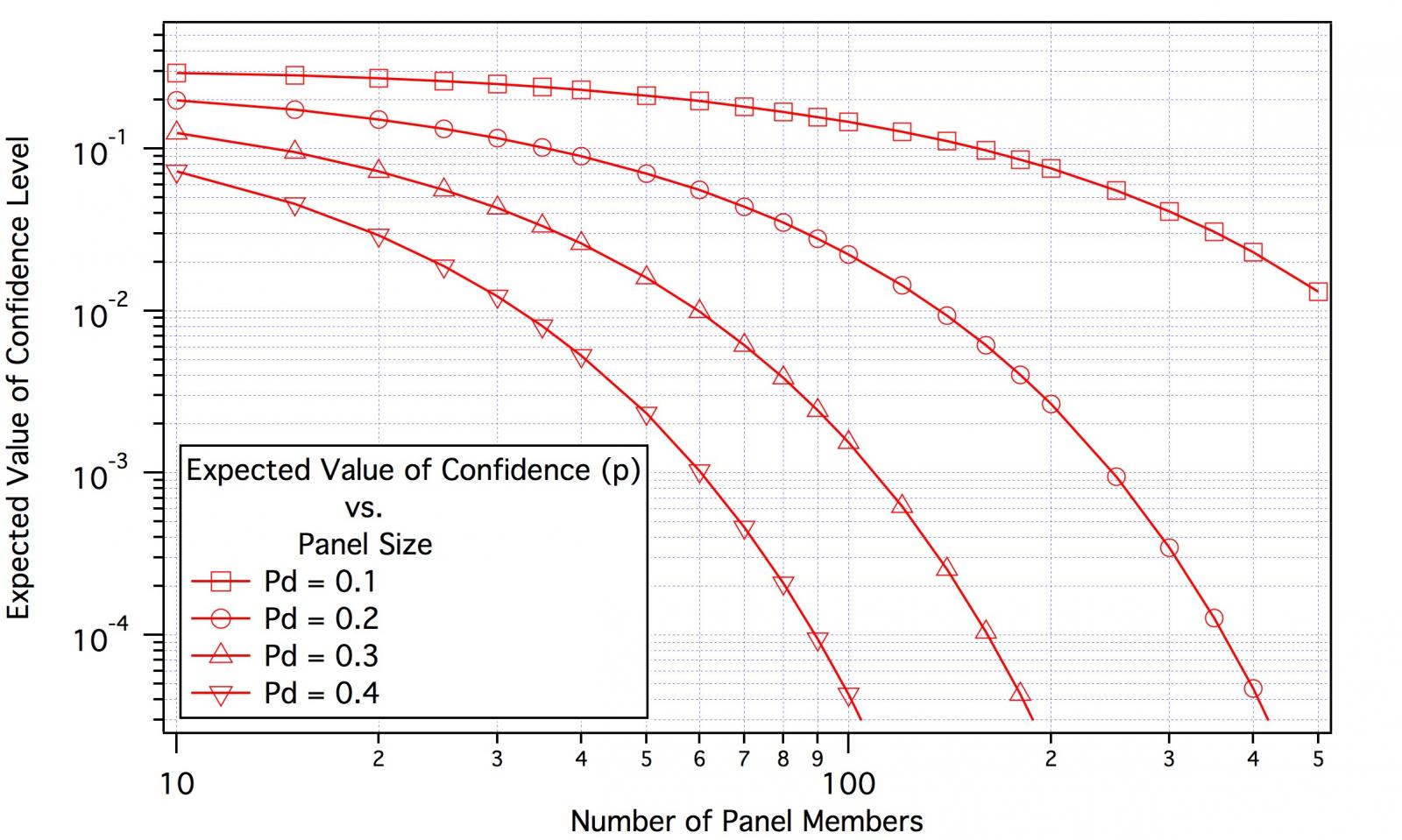

The experiment did achieve significance at the 0.04 level. We can say, with confidence at that level, that no more than 20% of the population represented by the taste panel would be able to distinguish beers brewed with these two yeasts. We can also say, at the 0.002 level, that no more than 30% can. But the significance level for the claim that no more than 10% can tell the difference zooms to 0.28 (28%).
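The three statements about the 75-taster panel with 28 correct answers (the Brudragon row in the table) can be checked by evaluating the same Type II tail at Pd = 0.1, 0.2 and 0.3. This is a minimal sketch. It assumes the convention that matches the table: P(X <= x - 1) with pc = Pd + (1 - Pd)/3. The function name is hypothetical.

```python
from math import comb


def similarity_p(n: int, correct: int, pd: float) -> float:
    """Exact P(X <= correct - 1) if a proportion `pd` of tasters can tell the beers apart."""
    pc = pd + (1 - pd) / 3  # probability a randomly chosen taster picks the odd beer
    return sum(comb(n, k) * pc**k * (1 - pc) ** (n - k) for k in range(correct))


if __name__ == "__main__":
    # Brudragon row: 75 panelists, 28 correct
    for pd in (0.1, 0.2, 0.3):
        print(f"no more than {pd:.0%} can tell: p = {similarity_p(75, 28, pd):.3f}")
```

A small p at a given Pd is evidence that fewer than that proportion of tasters can distinguish the beers. Compare the three printed values with the 0.28, 0.04 and 0.002 figures quoted above.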

In many cases it's clear that a simple increase in panel size would have yielded statistical significance. It is a real pity that these guys went to all this work (there are pages of these things) without consulting a statistician. These experiments could have been a goldmine both to those who wish to ignore the statistical aspects (take them for what they are, not what we want them to be) and to those who want to understand what they really mean. Even so, there is quite a bit of usable data to mine. IMO, if these folks want to go forward, they should immediately obtain the standard and refrain from practices that flagrantly violate it, such as presenting two of one type of beer and one of the other and serving the beers in different colored cups. They should also use the table in the standard to pick the panel size.
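Choosing a panel size runs the same calculation backwards. You fix the Type I risk (alpha), the Type II risk (beta) and the Pd you care about. Then you find the smallest panel for which some critical number of correct answers keeps both risks within bounds. The sketch below does that search with exact binomial tails. It illustrates how such a table can be built, but it is not the standard's table, whose entries may differ. The function names and the defaults (alpha = beta = 0.05, Pd = 0.2, picked to match this post) are my assumptions.

```python
from math import comb


def binom_pmfs(n: int, p: float) -> list[float]:
    """Exact Binomial(n, p) probabilities for 0..n successes."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def min_panel_size(alpha: float = 0.05, beta: float = 0.05, pd: float = 0.2,
                   max_n: int = 500) -> tuple[int, int]:
    """Smallest panel n, with critical count c, such that
    P(X >= c | pc = 1/3) <= alpha                 (Type I risk) and
    P(X <= c - 1 | pc = pd + (1 - pd)/3) <= beta  (Type II risk).
    """
    pc_alt = pd + (1 - pd) / 3
    for n in range(1, max_n + 1):
        null = binom_pmfs(n, 1 / 3)
        alt = binom_pmfs(n, pc_alt)
        # Smallest count of correct answers that is significant at alpha.
        c = next((k for k in range(n + 1) if sum(null[k:]) <= alpha), None)
        if c is None:
            continue
        # Type II risk grows with c, so the smallest such c is the best choice for this n.
        if sum(alt[:c]) <= beta:
            return n, c
    raise ValueError(f"no panel of up to {max_n} tasters meets both risks")


if __name__ == "__main__":
    n, c = min_panel_size()
    print(f"{n} tasters; call the beers different at {c} or more correct")
```

Loosening beta or raising the target Pd reduces the required panel. That is why the target Pd has to be chosen before tasters are recruited.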

Does this "professional" brewer think these experiments are a load of BS? No, though he does see problems with them.

| Experiment | Panelists | No. Correct | p Type I | p Type II (Pd = 0.2) | MLE Pd | Z | SD | Lower Lim | Upper Lim | Sig. |
|---|---|---|---|---|---|---|---|---|---|---|
| Hochkurz | 26 | 7 | 0.8150 | 0.0118 | 0.000 | 1.645 | 0.130 | 0.000 | 0.215 | II |
| Roasted Part 3 | 24 | 10 | 0.2538 | 0.2446 | 0.125 | 1.645 | 0.151 | 0.000 | 0.373 | |
| Hop Stand | 22 | 9 | 0.2930 | 0.2262 | 0.114 | 1.645 | 0.157 | 0.000 | 0.372 | |
| Yeast Comparison | 32 | 15 | 0.0777 | 0.4407 | 0.203 | 1.645 | 0.132 | 0.000 | 0.421 | |
| Water Chemistry | 20 | 8 | 0.3385 | 0.2065 | 0.100 | 1.645 | 0.164 | 0.000 | 0.370 | |
| Hop Storage | 16 | 4 | 0.8341 | 0.0207 | 0.000 | 1.645 | 0.162 | 0.000 | 0.267 | II |
| Ferm. Temp Pt 8 | 20 | 8 | 0.3385 | 0.2065 | 0.100 | 1.645 | 0.164 | 0.000 | 0.370 | |
| Loose vs. bagged | 21 | 12 | 0.0212 | 0.7717 | 0.357 | 1.645 | 0.162 | 0.091 | 0.624 | I |
| Traditional vs. short | 22 | 13 | 0.0116 | 0.8301 | 0.386 | 1.645 | 0.157 | 0.128 | 0.645 | I |
| Post Ferm Ox Pt 2 | 20 | 9 | 0.1905 | 0.3566 | 0.175 | 1.645 | 0.167 | 0.000 | 0.449 | |
| Dry Hop at Yeast Pitch | 16 | 6 | 0.4531 | 0.1624 | 0.063 | 1.645 | 0.182 | 0.000 | 0.361 | |
| Flushing w/ CO2 | 41 | 15 | 0.3849 | 0.0725 | 0.049 | 1.645 | 0.113 | 0.000 | 0.234 | |
| Boil Vigor | 21 | 11 | 0.0557 | 0.6215 | 0.286 | 1.645 | 0.163 | 0.017 | 0.555 | |
| Butyric Acid | 16 | 9 | 0.0500 | 0.6984 | 0.344 | 1.645 | 0.186 | 0.038 | 0.650 | I |
| BIAB: Squeezing | 27 | 8 | 0.7245 | 0.0228 | 0.000 | 1.645 | 0.132 | 0.000 | 0.217 | II |
| Dry Hop Length | 19 | 3 | 0.9760 | 0.0010 | 0.000 | 1.645 | 0.125 | 0.000 | 0.206 | II |
| Fermentation Temp Pt 7 | 22 | 11 | 0.0787 | 0.5415 | 0.250 | 1.645 | 0.160 | 0.000 | 0.513 | |
| Water Chemistry Pt. 8 | 22 | 8 | 0.4599 | 0.1178 | 0.045 | 1.645 | 0.154 | 0.000 | 0.298 | |
| The Impact Appearance Has | 15 | 7 | 0.2030 | 0.4006 | 0.200 | 1.645 | 0.193 | 0.000 | 0.518 | |
| Stainless vs Plastic | 20 | 6 | 0.7028 | 0.0406 | 0.000 | 1.645 | 0.154 | 0.000 | 0.253 | II |
| Yeast US-05 vs. K-97 | 21 | 15 | 0.0004 | 0.9806 | 0.571 | 1.645 | 0.148 | 0.328 | 0.815 | I |
| LODO | 38 | 25 | 0.0000 | 0.9863 | 0.487 | 1.645 | 0.115 | 0.297 | 0.677 | I |
| Yeast WLP001 vs. US-05 | 23 | 15 | 0.0017 | 0.9424 | 0.478 | 1.645 | 0.149 | 0.233 | 0.723 | I |
| Whirlfloc | 19 | 9 | 0.1462 | 0.4354 | 0.211 | 1.645 | 0.172 | 0.000 | 0.493 | |
| Yeast: Wyeast 1318 vs 1056 | 21 | 12 | 0.0212 | 0.7717 | 0.357 | 1.645 | 0.162 | 0.091 | 0.624 | I |
| Headspace | 20 | 11 | 0.0376 | 0.7002 | 0.325 | 1.645 | 0.167 | 0.051 | 0.599 | I |
| Yeast US-05 vs. 34/70 | 34 | 25 | 0.0000 | 0.9986 | 0.603 | 1.645 | 0.113 | 0.416 | 0.790 | I |
| Hops: Galaxy vs. Mosaic | 38 | 17 | 0.0954 | 0.3456 | 0.171 | 1.645 | 0.121 | 0.000 | 0.370 | |
| Storage Temperature | 20 | 12 | 0.0130 | 0.8342 | 0.400 | 1.645 | 0.164 | 0.130 | 0.670 | I |
| Yeast Pitch Temp Pt. 2 | 20 | 15 | 0.0002 | 0.9903 | 0.625 | 1.645 | 0.145 | 0.386 | 0.864 | I |
| Corny vs. Glass Fermenter | 29 | 16 | 0.0126 | 0.7682 | 0.328 | 1.645 | 0.139 | 0.100 | 0.555 | I |
| Brudragon: 1308 vs GY054 | 75 | 28 | 0.2675 | 0.0405 | 0.060 | 1.645 | 0.084 | 0.000 | 0.198 | II |
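The remaining columns can be reproduced from just the panel size n and the number correct x. The formulas below are my reconstruction, checked against a few rows (Hochkurz, Loose vs. bagged, Yeast Pitch Temp Pt. 2). They are: pc = x/n; MLE Pd = 1.5·pc - 0.5, floored at 0; SD = 1.5·sqrt(pc(1 - pc)/n); and the limits are MLE Pd minus or plus Z·SD, with Z = 1.645 (the one-sided 95% normal quantile) and the lower limit floored at 0. The function name is hypothetical, and capping the upper limit at 1 is my addition.

```python
from math import sqrt


def pd_estimate(n: int, correct: int, z: float = 1.645) -> dict[str, float]:
    """Maximum-likelihood proportion of distinguishers with normal-approximation limits."""
    pc = correct / n                    # observed proportion correct
    pd = max(0.0, 1.5 * pc - 0.5)       # invert pc = pd + (1 - pd)/3, floored at 0
    sd = 1.5 * sqrt(pc * (1 - pc) / n)  # 1.5 times the binomial SD of pc
    return {
        "mle_pd": pd,
        "sd": sd,
        "lower": max(0.0, pd - z * sd),
        "upper": min(1.0, pd + z * sd),  # cap at 1 is my addition; no row reaches it
    }


if __name__ == "__main__":
    for name, n, x in [("Hochkurz", 26, 7), ("Loose vs. bagged", 21, 12)]:
        r = pd_estimate(n, x)
        print(f"{name}: Pd={r['mle_pd']:.3f} SD={r['sd']:.3f} "
              f"[{r['lower']:.3f}, {r['upper']:.3f}]")
```

Both printed rows match the table to three decimals. The upper limit is the number to read when you want to claim that "at most this share of drinkers can tell".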

It's not as if I'm not already setting aside 4 or 5 hours on brew day, and I have always mashed for at least an hour, usually longer because I forget to get my strike water hot early. Anyway, thanks for the reminder.

Question: If I want more unfermentable sugars, is a shorter mash an option? I understand a higher mash temp (156-160 °F) does this, but it would be good to know.