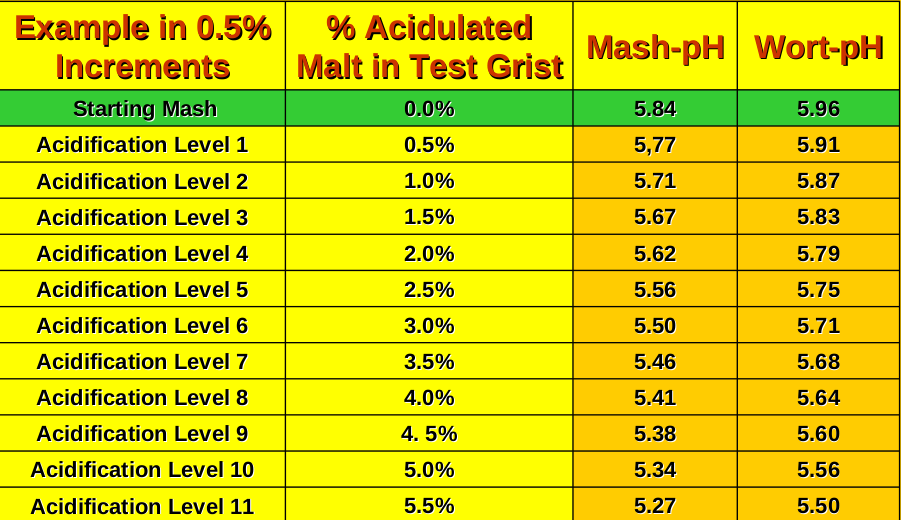

What ATC does do is assure you that if you measure pH at 66 degrees C. and find it to be 5.27, that is truly the wort's pH at 66 degrees C., and if you subsequently cool the very same wort sample to 20 degrees C. and measure it again, and this time your meter reads 5.50 pH, that is also its true pH. The bottom line is that pH's are naturally lower at higher temperatures. But for a pH meter to accurately indicate pH's at differing temperatures (with pH's displayed "as they are", and not "as you would like them to be") the "slope" of the internal formula used by the meter must be changed to match the temperature of the sample. All that 'ATC' does is change this slope so you don't have to correct for an incorrect slope.
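To make the "slope" idea concrete, here is a minimal sketch of the ideal (Nernst) electrode slope and how a meter might turn millivolts into pH. It assumes a perfect probe that reads 0 mV at pH 7. Real meters use calibrated values instead, and the function names are made up for illustration.

```python
import math

R = 8.314462618   # gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol


def nernst_slope_mv(temp_c):
    """Ideal electrode slope in mV per pH unit at temp_c (degrees C)."""
    return 1000.0 * R * (temp_c + 273.15) * math.log(10) / F


def ph_from_mv(mv, temp_c, iso_ph=7.0):
    """Convert probe millivolts to pH using the slope for temp_c.

    Assumes an ideal probe that outputs 0 mV at iso_ph (hypothetical;
    a real meter gets this from calibration).
    """
    return iso_ph - mv / nernst_slope_mv(temp_c)


# The slope the meter has to use grows with temperature.
for t in (20.0, 66.0):
    print(f"{t:.0f} C: {nernst_slope_mv(t):.2f} mV per pH unit")
```

Under these assumptions the slope is roughly 58 mV per pH unit at 20 degrees C. and roughly 67 mV per pH unit at 66 degrees C. That difference is all ATC adjusts for; it says nothing about how the wort itself changes with temperature.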

This is a challenging concept for many home brewers to get their heads around because they naturally think that the temperature correction (ATC) solves the problem. In fact there are two problems when comparing pH's at different temperatures:

1. Temperature affects the way your device reads pH. A correction needs to be made to normalize (or normalise if you're zed-phobic) temperatures to a standard (e.g., 68° F). This correction is fairly straightforward to understand and parallels how we think about correcting S.G. based on temperature.

2. Temperature can also directly change the pH of a sample. The ATC correction does not account for the fact that the pH of mash at mash temperatures will be lower than when that mash sample is allowed to cool to room temperature. The problem is that this effect is also a temperature effect, and pH meters advertise built-in temperature correction. So it's easy to see how people misunderstand this:

"Wait, I bought a meter that temperature-corrects my 150° F mash pH, but now you're telling me that I still need to let it cool down to room temperature before measuring the pH?"

The best way I've found to explain this to people is to do the following experiment the next time that you brew:

1. Measure the mash pH at mash temp, but put the temperature probe in room temperature water (and the pH probe in the mash). This is effectively what would happen if you had no temperature correction (ATC) on your meter. It's an incorrect reading because you have tricked the device into thinking the sample is at room temp, so it applies the wrong internal correction.

2. Now measure the mash pH at mash temp as you normally would by putting both temp and pH probes in the hot mash. This reading (e.g., 5.30) is a temperature-corrected reading. It is an internal correction that is specific to the meter. This reading puts you on a level playing field with scientists all across the world who use ATC when reporting pH. And, and this is important, if temperature did not directly alter the pH of your mash, you could simply use this value as a true pH reading.

3. Let the same mash sample cool to 68° F. Put the temp probe and pH probe in this cooled sample and measure. What you are likely to discover is that the pH now reads higher (e.g., 5.5). Why? Because temperature changes the true pH value. In this case, heating a sample of wort is figuratively the same as adding some acid to it. And your ATC pH meter has no knowledge of what you're sticking it in. It doesn't know whether temperature fundamentally changes the pH of your mash, or aquarium water, or your hot tub. It just knows how to mechanically correct itself to a standard 68°F.

In short, if you want a mash pH of 5.3 at 68° F, you must measure your mash sample at 68° F even if using an ATC pH meter.
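If it helps to see the three-step experiment as numbers, here is a rough simulation. It assumes an ideal probe (0 mV at pH 7, Nernst slope), a mash at about 150° F (65.6° C), and the example pH values from above (5.30 hot, 5.50 cooled). All function and variable names are made up for illustration, and a real meter and probe will not behave this cleanly.

```python
import math

R = 8.314462618   # gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol


def slope_mv(temp_c):
    """Ideal electrode slope in mV per pH unit at temp_c (degrees C)."""
    return 1000.0 * R * (temp_c + 273.15) * math.log(10) / F


def electrode_mv(true_ph, sample_temp_c):
    """Voltage an ideal probe puts out for a sample's true pH."""
    return -slope_mv(sample_temp_c) * (true_ph - 7.0)


def meter_reading(mv, temp_probe_c):
    """What the meter displays, given whatever its temp probe reports."""
    return 7.0 - mv / slope_mv(temp_probe_c)


MASH_C = 65.6    # about 150 F
ROOM_C = 20.0    # 68 F
PH_HOT = 5.30    # example true pH of the mash at mash temp
PH_COOL = 5.50   # example true pH of the same sample once cooled

mv_hot = electrode_mv(PH_HOT, MASH_C)

# Step 1: pH probe in the hot mash, temp probe in room temperature water.
step1 = meter_reading(mv_hot, ROOM_C)
# Step 2: both probes in the hot mash (ATC working as intended).
step2 = meter_reading(mv_hot, MASH_C)
# Step 3: both probes in the cooled sample.
step3 = meter_reading(electrode_mv(PH_COOL, ROOM_C), ROOM_C)

print(f"step 1 (tricked meter): {step1:.2f}")
print(f"step 2 (ATC, hot mash): {step2:.2f}")
print(f"step 3 (ATC, cooled):   {step3:.2f}")
```

With these made-up inputs, step 1 comes out at roughly 5.04, which is simply wrong. Steps 2 and 3 return 5.30 and 5.50, both correct for the temperature they were taken at. The gap between steps 2 and 3 is the wort changing, not the meter, and no amount of ATC removes it.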
