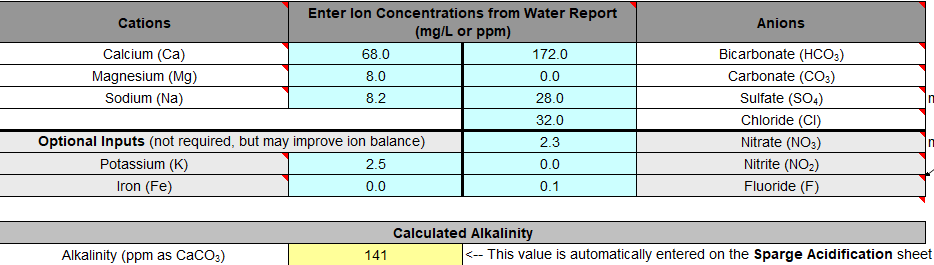

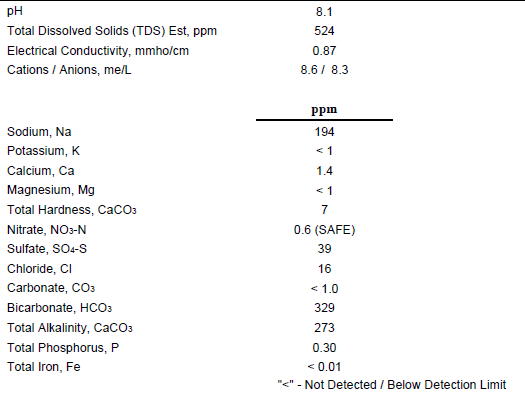

Recently got my water tested; the results are above.

A couple of questions occurred to me after the fact that I really should have considered before sending my water sample in.

1. Does the Bru'n Water spreadsheet account for using 10 gallons of water to make 5 gallons of beer? For example, if my sodium level is 194 ppm and I turn 10 gallons of water into 5 gallons, does the sodium become more concentrated? (I'm simplifying, since it's not exactly 10 to 5 once you account for water absorbed by the malt, etc.) Am I overthinking this?

2. Is there any sort of standard for how much sodium a water softener adds? Instead of cutting my tap water with RO or distilled water, I could add a splitter before my water softener to get less salty water.

3. I didn't take a sample of water that had been treated with Campden tablets. Does anyone have the math on how that changes the water chemistry?
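For anyone who wants to run numbers on these three questions, here is a rough Python sketch of the arithmetic. It is not how Bru'n Water calculates anything, and every input beyond the 194 ppm sodium figure is a placeholder. The assumptions are as follows. For question 1, sodium doesn't evaporate, so only water lost to boil-off concentrates it; water soaked up by the grain is assumed to carry its dissolved ions with it. For question 2, an ideal ion-exchange softener swaps every Ca²⁺ and Mg²⁺ ion for two Na⁺ ions, and blending is a simple volume-weighted average. For question 3, Campden tablets are treated as pure potassium metabisulfite (K₂S₂O₅) with all of the sulfur ending up as sulfate, which gives an upper bound. The function names, the 0.44 g tablet mass, the kettle volumes, and the raw-water numbers are all hypothetical.

```python
"""Back-of-the-envelope brewing water arithmetic (a sketch, not Bru'n Water's method)."""

# Molar masses in g/mol.
NA, CA, MG, K, S, O = 22.990, 40.078, 24.305, 39.098, 32.06, 15.999
K2S2O5 = 2 * K + 2 * S + 5 * O  # potassium metabisulfite
SO4 = S + 4 * O
LITERS_PER_GALLON = 3.785411784


def after_boil(ppm, pre_boil_gal, post_boil_gal):
    """Q1: scale a non-volatile ion (e.g. sodium) by the boil-off ratio.

    Assumes the ion's mass is conserved while water evaporates, and that water
    absorbed by the grain leaves with its dissolved ions, so absorption alone
    does not change the concentration.
    """
    if not 0 < post_boil_gal <= pre_boil_gal:
        raise ValueError("need 0 < post-boil volume <= pre-boil volume")
    return ppm * pre_boil_gal / post_boil_gal


def softener_sodium_added(ca_ppm, mg_ppm):
    """Q2: sodium (mg/L) added by an idealized ion-exchange softener.

    Each Ca2+ or Mg2+ removed is swapped for two Na+; assumes all hardness is removed.
    """
    return ca_ppm * 2 * NA / CA + mg_ppm * 2 * NA / MG


def blend(ppm_a, ppm_b, fraction_a):
    """Q2: concentration of a mix that is fraction_a water A and the rest water B."""
    if not 0.0 <= fraction_a <= 1.0:
        raise ValueError("fraction_a must be between 0 and 1")
    return fraction_a * ppm_a + (1.0 - fraction_a) * ppm_b


def campden_additions(tablet_g, tablets, gallons):
    """Q3: upper-bound potassium and sulfate (mg/L) added by Campden tablets.

    Assumes pure potassium metabisulfite with all sulfur ending up as sulfate;
    ignores tablet binders and the chloride released from chloramine.
    """
    mol = tablet_g * tablets / K2S2O5
    liters = gallons * LITERS_PER_GALLON
    potassium = mol * 2 * K * 1000 / liters
    sulfate = mol * 2 * SO4 * 1000 / liters
    return potassium, sulfate


if __name__ == "__main__":
    # 194 ppm sodium from the question; the 7 -> 6 gallon boil is hypothetical.
    print(f"Na after boil: {after_boil(194.0, 7.0, 6.0):.1f} ppm")

    # Hypothetical raw water: Ca 60, Mg 20, Na 30 ppm (made-up numbers).
    softened_na = 30.0 + softener_sodium_added(60.0, 20.0)
    print(f"Softened Na: {softened_na:.1f} ppm")
    # 50/50 split between softened water and water that bypasses the softener.
    print(f"50/50 softened/bypass Na: {blend(softened_na, 30.0, 0.5):.1f} ppm")
    # RO water treated as roughly 0 ppm sodium.
    print(f"50/50 softened/RO Na: {blend(softened_na, 0.0, 0.5):.1f} ppm")

    # Assumed 0.44 g tablet at one tablet per 20 gallons; check your package.
    k_ppm, so4_ppm = campden_additions(0.44, 1, 20.0)
    print(f"Campden: +{k_ppm:.1f} ppm K, up to +{so4_ppm:.1f} ppm SO4")
```

The main takeaway from the sketch is that only evaporation raises the concentration, so the relevant ratio is pre-boil to post-boil volume, not total water to finished beer. Real softeners may not remove every bit of hardness, so the sodium figure is an upper bound.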

Thanks in advance!
